Simple Page Table

In the previous chapter, we enabled loading the Ymir ELF image from the filesystem into memory. Ideally, we would load the kernel right after that, but to do so we need to manipulate the page tables to map the virtual addresses requested by the ELF file. Since the Surtr bootloader only needs to manipulate page tables to load the kernel image, this chapter focuses on implementing the minimal set of page table operations required.

important

The source code for this chapter is in the whiz-surtr-simple_pg branch.


Creating the arch Directory

Page table structures and operations heavily depend on the CPU architecture. In this series, we only support x86-64, but even so, architecture-specific code should be organized into separate layers.

Create an x86 directory inside the arch directory, resulting in the following structure:

```
> tree ./surtr
./surtr
├── arch
│   └── x86
│       └── arch.zig
├── arch.zig
├── boot.zig
└── log.zig
```

- arch.zig: Used directly from boot.zig. It provides an API that abstracts architecture-dependent concepts as much as possible, preventing direct access to the APIs provided by files under arch/.
- arch/x86/arch.zig: The root file that exports x86-64-specific APIs and related functionality.

In surtr/arch.zig, the code under arch/ is exported according to the target architecture as follows:

```zig
const builtin = @import("builtin");

pub usingnamespace switch (builtin.target.cpu.arch) {
    .x86_64 => @import("arch/x86/arch.zig"),
    else => @compileError("Unsupported architecture."),
};
```

builtin.target.cpu.arch corresponds to the target CPU architecture specified by .cpu_arch in build.zig. Although only x86_64 is supported for now, this value would vary with the target if other architectures were supported. Since this value is known at compile time, the switch is also evaluated at compile time, and only the root file for the matching architecture is exported.
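The compile-time selection can be tried in isolation. In this sketch, x86 and aarch64 are hypothetical stand-ins for per-architecture root files such as arch/x86/arch.zig, and the result is exposed through a plain pub const named impl (the extra namespace level discussed below) rather than through usingnamespace:

```zig
const std = @import("std");
const builtin = @import("builtin");

// Hypothetical stand-ins for per-architecture root files.
// Each one only exports its name for this sketch.
const x86 = struct {
    pub const name = "x86_64";
};
const aarch64 = struct {
    pub const name = "aarch64";
};

// builtin.target.cpu.arch is comptime-known, so only the matching prong is
// analyzed; the @compileError fires only when building for another target.
pub const impl = switch (builtin.target.cpu.arch) {
    .x86_64 => x86,
    .aarch64 => aarch64,
    else => @compileError("Unsupported architecture."),
};
```

Building for any other target stops with "Unsupported architecture." because the non-matching prongs are never analyzed.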

info

usingnamespace is a feature that brings all the public declarations of a specified struct into the namespace of the current container. Without it, if you simply assigned @import("arch/x86/arch.zig") to a constant, the caller would need to go through an extra namespace level as follows:

```zig
// -- surtr/arch.zig --
pub const impl = @import("arch/x86/arch.zig");

// -- surtr/boot.zig --
const arch = @import("arch.zig");
arch.impl.someFunction();
```

By using usingnamespace, you can eliminate this extra level, making the code cleaner:

```zig
// -- surtr/arch.zig --
pub usingnamespace @import("arch/x86/arch.zig");

// -- surtr/boot.zig --
const arch = @import("arch.zig");
arch.someFunction();
```

Although it may look like black magic, note that usingnamespace does not make the imported declarations usable as bare identifiers in the current file:

```zig
usingnamespace @import("some.zig"); // the file that defines someFunction()
someFunction(); // this is not allowed
```

arch/x86/arch.zig exports the files containing architecture-dependent code that should be accessible from surtr/arch.zig and above. Since we want to implement page tables, we will create arch/x86/page.zig and export it from arch/x86/arch.zig:

```zig
pub const page = @import("page.zig");
```

With this setup, you can now access x64 paging-related functionality directly from boot.zig.

Page Table Entry

From here, we will enable page table manipulation. When control is transferred from the UEFI firmware to Surtr, UEFI has already switched the CPU to 64-bit mode and constructed the initial page tables. Let's check the memory map using the vmmap command of gef. Since -s is passed to QEMU in build.zig, a GDB server listens on port 1234. Surtr currently enters an infinite loop at the end of main(), so you can attach with GDB while it is looping:

Memory Map

```
gef> target remote:1234
gef> vmmap
--------------------------------------- Memory map ---------------------------------------
Virtual address start-end Physical address start-end Total size Page size Count Flags
0x0000000000000000-0x0000000000200000 0x0000000000000000-0x0000000000200000 0x200000 0x200000 1 [RWX KERN ACCESSED DIRTY]
0x0000000000200000-0x0000000000800000 0x0000000000200000-0x0000000000800000 0x600000 0x200000 3 [RWX KERN ACCESSED]
0x0000000000800000-0x0000000000a00000 0x0000000000800000-0x0000000000a00000 0x200000 0x200000 1 [RWX KERN ACCESSED DIRTY]
0x0000000000a00000-0x000000001be00000 0x0000000000a00000-0x000000001be00000 0x1b400000 0x200000 218 [RWX KERN ACCESSED]
0x000000001be00000-0x000000001c000000 0x000000001be00000-0x000000001c000000 0x200000 0x200000 1 [RWX KERN ACCESSED DIRTY]
0x000000001c000000-0x000000001e200000 0x000000001c000000-0x000000001e200000 0x2200000 0x200000 17 [RWX KERN ACCESSED]
0x000000001e200000-0x000000001ee00000 0x000000001e200000-0x000000001ee00000 0xc00000 0x200000 6 [RWX KERN ACCESSED DIRTY]
0x000000001ee00000-0x000000001f000000 0x000000001ee00000-0x000000001f000000 0x200000 0x200000 1 [R-X KERN ACCESSED DIRTY]
0x000000001f000000-0x000000001fa00000 0x000000001f000000-0x000000001fa00000 0xa00000 0x200000 5 [RWX KERN ACCESSED DIRTY]
0x000000001fa00000-0x000000001fac6000 0x000000001fa00000-0x000000001fac6000 0xc6000 0x1000 198 [RWX KERN ACCESSED DIRTY]
0x000000001fac6000-0x000000001fac7000 0x000000001fac6000-0x000000001fac7000 0x1000 0x1000 1 [RW- KERN ACCESSED DIRTY]
0x000000001fac7000-0x000000001fac8000 0x000000001fac7000-0x000000001fac8000 0x1000 0x1000 1 [R-X KERN ACCESSED DIRTY]
0x000000001fac8000-0x000000001faca000 0x000000001fac8000-0x000000001faca000 0x2000 0x1000 2 [RW- KERN ACCESSED DIRTY]
0x000000001faca000-0x000000001facb000 0x000000001faca000-0x000000001facb000 0x1000 0x1000 1 [R-X KERN ACCESSED DIRTY]
0x000000001facb000-0x000000001facd000 0x000000001facb000-0x000000001facd000 0x2000 0x1000 2 [RW- KERN ACCESSED DIRTY]
0x000000001facd000-0x000000001facf000 0x000000001facd000-0x000000001facf000 0x2000 0x1000 2 [R-X KERN ACCESSED DIRTY]
0x000000001facf000-0x000000001fad1000 0x000000001facf000-0x000000001fad1000 0x2000 0x1000 2 [RW- KERN ACCESSED DIRTY]
0x000000001fad1000-0x000000001fad2000 0x000000001fad1000-0x000000001fad2000 0x1000 0x1000 1 [R-X KERN ACCESSED DIRTY]
0x000000001fad2000-0x000000001fad4000 0x000000001fad2000-0x000000001fad4000 0x2000 0x1000 2 [RW- KERN ACCESSED DIRTY]
0x000000001fad4000-0x000000001fadb000 0x000000001fad4000-0x000000001fadb000 0x7000 0x1000 7 [R-X KERN ACCESSED DIRTY]
0x000000001fadb000-0x000000001fade000 0x000000001fadb000-0x000000001fade000 0x3000 0x1000 3 [RW- KERN ACCESSED DIRTY]
0x000000001fade000-0x000000001fadf000 0x000000001fade000-0x000000001fadf000 0x1000 0x1000 1 [R-X KERN ACCESSED DIRTY]
0x000000001fadf000-0x000000001fae2000 0x000000001fadf000-0x000000001fae2000 0x3000 0x1000 3 [RW- KERN ACCESSED DIRTY]
0x000000001fae2000-0x000000001fae3000 0x000000001fae2000-0x000000001fae3000 0x1000 0x1000 1 [R-X KERN ACCESSED DIRTY]
0x000000001fae3000-0x000000001fae6000 0x000000001fae3000-0x000000001fae6000 0x3000 0x1000 3 [RW- KERN ACCESSED DIRTY]
0x000000001fae6000-0x000000001fae7000 0x000000001fae6000-0x000000001fae7000 0x1000 0x1000 1 [R-X KERN ACCESSED DIRTY]
0x000000001fae7000-0x000000001faea000 0x000000001fae7000-0x000000001faea000 0x3000 0x1000 3 [RW- KERN ACCESSED DIRTY]
0x000000001faea000-0x000000001faeb000 0x000000001faea000-0x000000001faeb000 0x1000 0x1000 1 [R-X KERN ACCESSED DIRTY]
0x000000001faeb000-0x000000001faed000 0x000000001faeb000-0x000000001faed000 0x2000 0x1000 2 [RW- KERN ACCESSED DIRTY]
0x000000001faed000-0x000000001fc00000 0x000000001faed000-0x000000001fc00000 0x113000 0x1000 275 [RWX KERN ACCESSED DIRTY]
0x000000001fc00000-0x000000001fe00000 0x000000001fc00000-0x000000001fe00000 0x200000 0x200000 1 [R-X KERN ACCESSED DIRTY]
0x000000001fe00000-0x0000000020000000 0x000000001fe00000-0x0000000020000000 0x200000 0x1000 512 [RWX KERN ACCESSED DIRTY]
0x0000000020000000-0x0000000040000000 0x0000000020000000-0x0000000040000000 0x20000000 0x200000 256 [RWX KERN ACCESSED]
0x0000000040000000-0x0000000080000000 0x0000000040000000-0x0000000080000000 0x40000000 0x40000000 1 [RWX KERN ACCESSED]
```

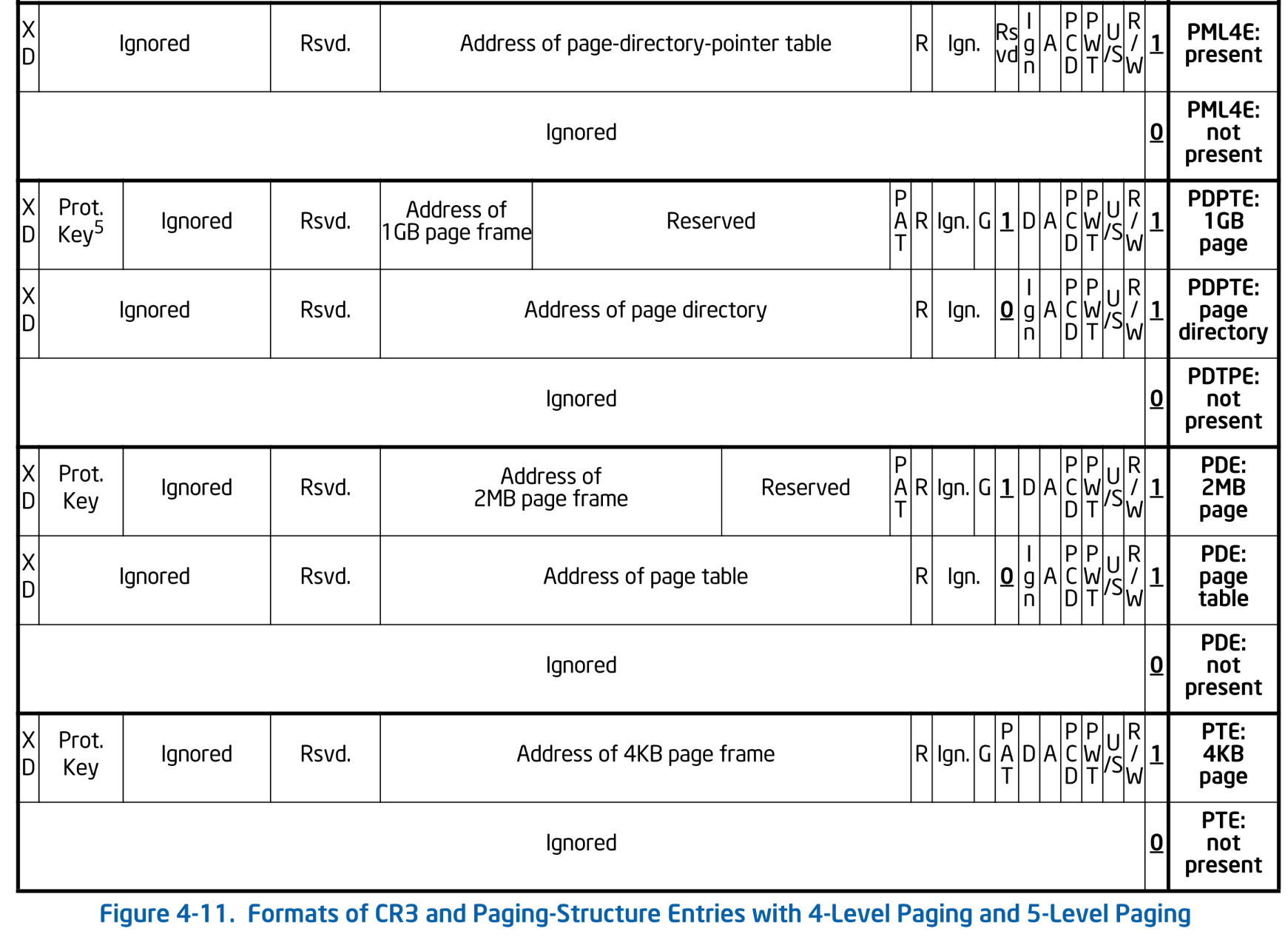

The page tables prepared by UEFI map each virtual address to the identical physical address (an identity mapping). The full-fledged page table setup will be handled by Ymir; Surtr performs only a simple paging configuration. In this series, we adopt 4-level paging. The page table entries at each level of 4-level paging have the following structure:

Formats of CR3 and Paging-Structure Entries with 4-Level Paging. SDM Vol.3A 4.5.5


There are four types of entries, which Intel refers to as PML4E, PDPTE, PDE, and PTE. The naming varies depending on the software; for example, Linux calls them PGD, PUD, PMD, and PTE. Since these names can be unintuitive, in this series we will refer to them as Lv4, Lv3, Lv2, and Lv1 respectively.

First, let's define structures representing each of the four entries. As you can see from the diagram above, all four entries share a very similar structure[1]. Therefore, we define a function called EntryBase() that returns a type, and then use it to define the four entries as follows:

```zig
const TableLevel = enum { lv4, lv3, lv2, lv1 };

fn EntryBase(table_level: TableLevel) type {
    return packed struct(u64) {
        const Self = @This();
        const level = table_level;

        /// Present.
        present: bool = true,
        /// Read/Write.
        /// If set to false, write access is not allowed to the region.
        rw: bool,
        /// User/Supervisor.
        /// If set to false, user-mode access is not allowed to the region.
        us: bool,
        /// Page-level write-through.
        /// Indirectly determines the memory type used to access the page or page table.
        pwt: bool = false,
        /// Page-level cache disable.
        /// Indirectly determines the memory type used to access the page or page table.
        pcd: bool = false,
        /// Accessed.
        /// Indicates whether this entry has been used for translation.
        accessed: bool = false,
        /// Dirty bit.
        /// Indicates whether software has written to the page mapped by this entry.
        /// Ignored when this entry references a page table.
        dirty: bool = false,
        /// Page Size.
        /// If set to true, the entry maps a page.
        /// If set to false, the entry references a page table.
        ps: bool,
        /// Global.
        /// Ignored when CR4.PGE != 1.
        /// Ignored when this entry references a page table.
        /// Ignored for level-4 entries.
        global: bool = true,
        /// Ignored.
        _ignored1: u2 = 0,
        /// Ignored except for HLAT paging.
        restart: bool = false,
        /// When the entry maps a page, physical address of the page.
        /// When the entry references a page table, 4KiB-aligned address of the page table.
        phys: u51,
        /// Execute Disable.
        xd: bool = false,
    };
}

const Lv4Entry = EntryBase(.lv4);
const Lv3Entry = EntryBase(.lv3);
const Lv2Entry = EntryBase(.lv2);
const Lv1Entry = EntryBase(.lv1);
```

In Zig, functions can return types, enabling a feature somewhat similar to templates in C++. The EntryBase() function takes an enum called TableLevel, which corresponds to the entry's level, and returns a struct representing the page table entry for that level. The passed argument can be used as a constant within the returned struct. Additionally, by declaring it as a packed struct(u64), we ensure that the total size of the fields is exactly 64 bits[2].

Finally, by calling EntryBase() for each LvXEntry, we define the four types of page table entries. This is similar to template instantiation in C++. Since these are resolved at compile time, there is no runtime overhead.
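Because fields of a packed struct are laid out starting from the least significant bit, each field of EntryBase() lands on the bit position the SDM assigns to it. The following sketch re-declares EntryBase() with its doc comments trimmed and checks the resulting layout; raw() is a hypothetical helper added only for this check:

```zig
const std = @import("std");

const TableLevel = enum { lv4, lv3, lv2, lv1 };

// Same field order and widths as EntryBase() in the text (comments trimmed).
fn EntryBase(comptime table_level: TableLevel) type {
    return packed struct(u64) {
        pub const level = table_level;

        present: bool = true, // bit 0
        rw: bool, // bit 1
        us: bool, // bit 2
        pwt: bool = false, // bit 3
        pcd: bool = false, // bit 4
        accessed: bool = false, // bit 5
        dirty: bool = false, // bit 6
        ps: bool, // bit 7
        global: bool = true, // bit 8
        _ignored1: u2 = 0, // bits 9-10
        restart: bool = false, // bit 11
        phys: u51, // bits 12-62
        xd: bool = false, // bit 63
    };
}

const Lv2Entry = EntryBase(.lv2);

/// Hypothetical helper: reinterprets an entry as the raw 64-bit value
/// that would be stored in the page table.
fn raw(entry: Lv2Entry) u64 {
    return @bitCast(entry);
}
```

For a 2MiB page at physical address 0x20_0000 with rw = true and ps = true, the raw value comes out as 0x20_0183: P (0x1), R/W (0x2), PS (0x80), G (0x100, because global defaults to true) plus the page address.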

We add a method to this struct to retrieve the physical address pointed to by the entry, which can be either the next-level page table or the physical page itself:

```zig
pub const Phys = u64;
pub const Virt = u64;

pub inline fn address(self: Self) Phys {
    return @as(u64, @intCast(self.phys)) << 12;
}
```

Phys and Virt represent physical and virtual address types, respectively. When implementing paging functions, it is easy to mix up physical and virtual addresses[3], so these types make explicit whether an address is physical or virtual to help prevent such mistakes[4]. The address() function is a helper that shifts the internal phys field left by 12 bits to produce the physical address. The returned address is the physical address of the mapped page if the entry maps a page (.ps == true), or the physical address of the referenced page table if the entry points to another page table (.ps == false).
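A small sketch of the round trip between storing an address (as newMapPage() does with >> 12) and reading it back with address(). Entry is a hypothetical, trimmed-down stand-in that lumps bits 0-11 into a single flags field, and fromPhys() is a hypothetical constructor:

```zig
const std = @import("std");

pub const Phys = u64;
pub const Virt = u64;

// Hypothetical trimmed-down entry: only the phys field (bits 12-62) matters here.
const Entry = packed struct(u64) {
    flags: u12 = 0, // bits 0-11 (P, R/W, ..., not used in this sketch)
    phys: u51,
    xd: bool = false,

    /// Same computation as address() in the text.
    pub fn address(self: Entry) Phys {
        return @as(u64, self.phys) << 12;
    }

    /// Stores a physical address the same way newMapPage() does.
    pub fn fromPhys(phys: Phys) Entry {
        return .{ .phys = @truncate(phys >> 12) };
    }
};
```

The low 12 bits are never stored, so an unaligned address comes back rounded down to its page. Note also that Phys == Virt holds at compile time: the aliases document intent but the compiler cannot tell them apart.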

Next, define a function to create a page table entry. It is straightforward when creating an entry that maps a page:

```zig
pub fn newMapPage(phys: Phys, present: bool) Self {
    if (level == .lv4) @compileError("Lv4 entry cannot map a page");
    return Self{
        .present = present,
        .rw = true,
        .us = false,
        .ps = true,
        .phys = @truncate(phys >> 12),
    };
}
```

To map a page, set .ps to true and specify the physical address of the page to be mapped. Note that Surtr/Ymir does not support 512 GiB pages, so if this function is called for a Lv4Entry (i.e., level == .lv4), it will result in a compile-time error.

Similarly, we define a function to create an entry that references a page table. In this case, the argument is not the physical address of a page, but a pointer to an entry one level lower than itself. To do this, we need to define the "type of the entry one level below." Add the following constant to the struct:

```zig
const LowerType = switch (level) {
    .lv4 => Lv3Entry,
    .lv3 => Lv2Entry,
    .lv2 => Lv1Entry,
    .lv1 => struct {},
};
```

If the entry is Lv4Entry, then LowerType is Lv3Entry. Since there is no entry below Lv1Entry, LowerType is an empty struct in that case. Using this, the function to create an entry that references a page table looks like this:

```zig
pub fn newMapTable(table: [*]LowerType, present: bool) Self {
    if (level == .lv1) @compileError("Lv1 entry cannot reference a page table");
    return Self{
        .present = present,
        .rw = true,
        .us = false,
        .ps = false,
        .phys = @truncate(@intFromPtr(table) >> 12),
    };
}
```

table is a pointer to the page table that this entry points to. In contrast to the previous case, if the entry itself is an Lv1Entry, this will result in a compile-time error.
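Putting LowerType, newMapPage() and newMapTable() together, the following self-contained sketch (the same definitions as above, condensed) checks that an entry created by newMapTable() points back at the table it was given. Using an ordinary 4KiB-aligned array as the "page table" is an assumption made so that the sketch runs as a hosted test; it is never loaded into CR3:

```zig
const std = @import("std");

pub const Phys = u64;

const TableLevel = enum { lv4, lv3, lv2, lv1 };

fn EntryBase(comptime table_level: TableLevel) type {
    return packed struct(u64) {
        const Self = @This();
        const level = table_level;

        // Entry type of the table one level below (empty struct for Lv1).
        const LowerType = switch (level) {
            .lv4 => Lv3Entry,
            .lv3 => Lv2Entry,
            .lv2 => Lv1Entry,
            .lv1 => struct {},
        };

        present: bool = true,
        rw: bool,
        us: bool,
        pwt: bool = false,
        pcd: bool = false,
        accessed: bool = false,
        dirty: bool = false,
        ps: bool,
        global: bool = true,
        _ignored1: u2 = 0,
        restart: bool = false,
        phys: u51,
        xd: bool = false,

        pub fn address(self: Self) Phys {
            return @as(u64, self.phys) << 12;
        }

        pub fn newMapPage(phys: Phys, present: bool) Self {
            // `level` is comptime-known, so this branch is only analyzed for Lv4.
            if (level == .lv4) @compileError("Lv4 entry cannot map a page");
            return Self{ .present = present, .rw = true, .us = false, .ps = true, .phys = @truncate(phys >> 12) };
        }

        pub fn newMapTable(table: [*]LowerType, present: bool) Self {
            if (level == .lv1) @compileError("Lv1 entry cannot reference a page table");
            return Self{ .present = present, .rw = true, .us = false, .ps = false, .phys = @truncate(@intFromPtr(table) >> 12) };
        }
    };
}

const Lv4Entry = EntryBase(.lv4);
const Lv3Entry = EntryBase(.lv3);
const Lv2Entry = EntryBase(.lv2);
const Lv1Entry = EntryBase(.lv1);
```

Calling Lv4Entry.newMapPage() or Lv1Entry.newMapTable() in this sketch would stop the build with the corresponding @compileError, so misuse is caught before anything runs.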

Mapping a 4KiB page

With the table entries defined, let's implement the function to actually map pages. Surtr will support only 4KiB pages.

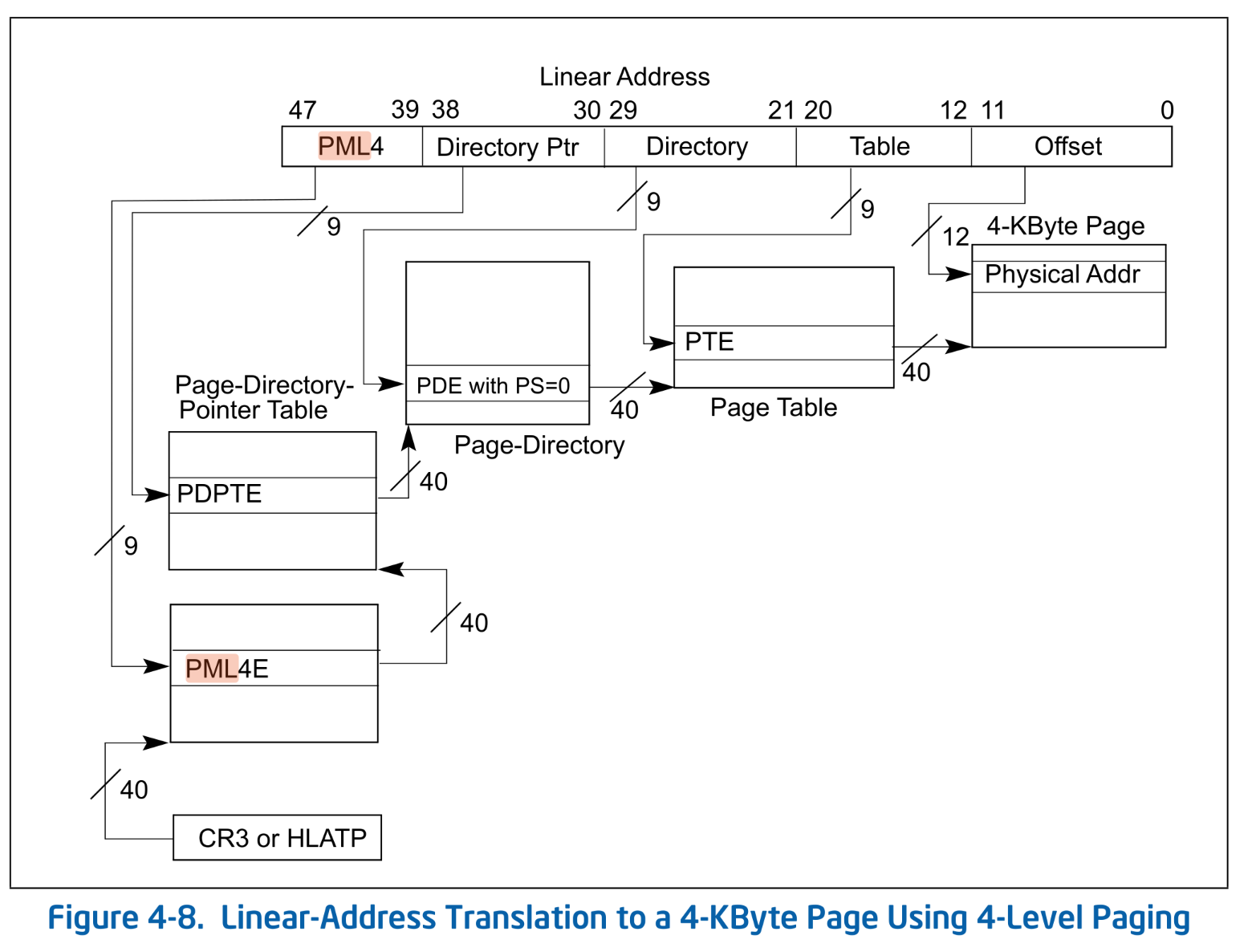

Page walking starts from the Lv4 table whose physical address is stored in the CR3 register, as shown in the diagram[5]. Bits [47:39] of the virtual address serve as the index into the Lv4 table. This index selects the Lv4 entry, which holds the address of the Lv3 table. Repeating this process with bits [38:30], [29:21] and [20:12] leads down to the Lv1 entry, which maps the 4KiB page; bits [11:0] are the offset within that page.


Linear-Address Translation to a 4-KByte Page Using 4-Level Paging. SDM Vol.3A 4.5.4
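As a worked example of these bit ranges, the sketch below splits the virtual address 0xFFFF_FFFF_DEAD_0000, which is mapped later in this chapter, into its four table indices and page offset. tableIndex() and pageOffset() are hypothetical helpers that use the shift amounts 39, 30, 21 and 12:

```zig
const std = @import("std");

pub const Virt = u64;

const TableLevel = enum { lv4, lv3, lv2, lv1 };

/// Hypothetical helper: 9-bit index into the table at `level` for `vaddr`.
fn tableIndex(level: TableLevel, vaddr: Virt) u9 {
    const shift: u6 = switch (level) {
        .lv4 => 39, // bits 47:39
        .lv3 => 30, // bits 38:30
        .lv2 => 21, // bits 29:21
        .lv1 => 12, // bits 20:12
    };
    return @truncate(vaddr >> shift);
}

/// Hypothetical helper: offset within the final 4KiB page (bits 11:0).
fn pageOffset(vaddr: Virt) u12 {
    return @truncate(vaddr);
}
```

So translating this address uses Lv4 index 511, Lv3 index 511, Lv2 index 0xF5 and Lv1 index 0xD0, with a page offset of 0.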

First, here are the functions to retrieve the page tables at each level:

```zig
const page_mask_4k: u64 = 0xFFF;
const num_table_entries: usize = 512;

fn getTable(T: type, addr: Phys) []T {
    const ptr: [*]T = @ptrFromInt(addr & ~page_mask_4k);
    return ptr[0..num_table_entries];
}

fn getLv4Table(cr3: Phys) []Lv4Entry {
    return getTable(Lv4Entry, cr3);
}

fn getLv3Table(lv3_paddr: Phys) []Lv3Entry {
    return getTable(Lv3Entry, lv3_paddr);
}

fn getLv2Table(lv2_paddr: Phys) []Lv2Entry {
    return getTable(Lv2Entry, lv2_paddr);
}

fn getLv1Table(lv1_paddr: Phys) []Lv1Entry {
    return getTable(Lv1Entry, lv1_paddr);
}
```

getTable() is the internal implementation that takes the type of the page table entry you want to retrieve and the physical address. Since page tables are always 4KiB-aligned, it masks out the lower 12 bits to get the base address of the page table. Then, it returns the table as a slice containing 512 entries starting from that address. The other four functions are helper functions to get the page tables of each respective level.

Next, we implement a function to get the page table entry corresponding to a given virtual address. This function takes the virtual address and the physical address of the page table as inputs:

```zig
fn getEntry(T: type, vaddr: Virt, paddr: Phys) *T {
    const table = getTable(T, paddr);
    const shift = switch (T) {
        Lv4Entry => 39,
        Lv3Entry => 30,
        Lv2Entry => 21,
        Lv1Entry => 12,
        else => @compileError("Unsupported type"),
    };
    return &table[(vaddr >> shift) & 0x1FF];
}

fn getLv4Entry(addr: Virt, cr3: Phys) *Lv4Entry {
    return getEntry(Lv4Entry, addr, cr3);
}

fn getLv3Entry(addr: Virt, lv3tbl_paddr: Phys) *Lv3Entry {
    return getEntry(Lv3Entry, addr, lv3tbl_paddr);
}

fn getLv2Entry(addr: Virt, lv2tbl_paddr: Phys) *Lv2Entry {
    return getEntry(Lv2Entry, addr, lv2tbl_paddr);
}

fn getLv1Entry(addr: Virt, lv1tbl_paddr: Phys) *Lv1Entry {
    return getEntry(Lv1Entry, addr, lv1tbl_paddr);
}
```

The internal implementation, getEntry(), first calls the previously defined getTable() to obtain the page table as a slice. Then, following the earlier diagram, it calculates the index of the entry from the virtual address and retrieves the corresponding entry from the table. Similar to getTable(), the remaining four functions are helper functions that instantiate the generic parameter T with specific types.
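Combining getTable() and getEntry(), a read-only page walk can be sketched as follows. Because UEFI identity-maps memory, a physical address can be used directly as a pointer; the test relies on the same idea by using ordinary host memory addresses as stand-in physical addresses. translate() is a hypothetical helper (not part of Surtr): it works on raw u64 entries rather than the LvXEntry types, and it deliberately gives up on large pages (PS=1) instead of resolving them:

```zig
const std = @import("std");

pub const Phys = u64;
pub const Virt = u64;

const num_table_entries: usize = 512;
const page_mask_4k: u64 = 0xFFF;
const present_bit: u64 = 1 << 0;
const ps_bit: u64 = 1 << 7;
// Bits 12-51: address of the next-level table or of the page.
const addr_mask: u64 = 0x000F_FFFF_FFFF_F000;

/// Same idea as getTable(): view a (identity-mapped) address as 512 entries.
fn getTable(addr: Phys) []u64 {
    const ptr: [*]u64 = @ptrFromInt(addr & ~page_mask_4k);
    return ptr[0..num_table_entries];
}

/// Hypothetical helper: walks Lv4 -> Lv1 and returns the physical address
/// for `vaddr`, or null if an entry is not present or maps a large page.
fn translate(lv4_paddr: Phys, vaddr: Virt) ?Phys {
    const shifts = [_]u6{ 39, 30, 21, 12 };
    var table_paddr = lv4_paddr;
    for (shifts, 0..) |shift, level_idx| {
        const index: u9 = @truncate(vaddr >> shift);
        const entry = getTable(table_paddr)[index];
        if ((entry & present_bit) == 0) return null;
        // Bit 7 is PS in Lv3/Lv2 entries (reserved in Lv4, PAT in Lv1).
        if (level_idx != shifts.len - 1 and (entry & ps_bit) != 0) return null;
        table_paddr = entry & addr_mask;
    }
    return table_paddr | (vaddr & page_mask_4k);
}
```

In the test below, four zeroed 4KiB-aligned arrays play the role of the Lv4 to Lv1 tables, and the indices computed for 0xFFFF_FFFF_DEAD_0123 (511, 511, 0xF5, 0xD0) are filled in by hand.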

With this, we are almost ready to retrieve page table entries from virtual addresses. As a helper, add a wrapper for reading the CR3 register to asm.zig:

```zig
pub inline fn readCr3() u64 {
    var cr3: u64 = undefined;
    asm volatile (
        \\mov %%cr3, %[cr3]
        : [cr3] "=r" (cr3),
    );
    return cr3;
}
```

Finally, the function to map a 4KiB page is shown below:

```zig
const uefi = @import("std").os.uefi;
const BootServices = uefi.tables.BootServices;
const am = @import("asm.zig");

pub const PageAttribute = enum {
    /// RO
    read_only,
    /// RW
    read_write,
    /// RX
    executable,
};

pub const PageError = error{ NoMemory, NotPresent, NotCanonical, InvalidAddress, AlreadyMapped };

/// Maps the 4KiB page at `virt` to `phys`, allocating missing intermediate tables.
pub fn map4kTo(virt: Virt, phys: Phys, attr: PageAttribute, bs: *BootServices) PageError!void {
    const rw = switch (attr) {
        .read_only, .executable => false,
        .read_write => true,
    };

    const lv4ent = getLv4Entry(virt, am.readCr3());
    if (!lv4ent.present) try allocateNewTable(Lv4Entry, lv4ent, bs);

    const lv3ent = getLv3Entry(virt, lv4ent.address());
    if (!lv3ent.present) try allocateNewTable(Lv3Entry, lv3ent, bs);

    const lv2ent = getLv2Entry(virt, lv3ent.address());
    if (!lv2ent.present) try allocateNewTable(Lv2Entry, lv2ent, bs);

    const lv1ent = getLv1Entry(virt, lv2ent.address());
    if (lv1ent.present) return PageError.AlreadyMapped;
    var new_lv1ent = Lv1Entry.newMapPage(phys, true);
    new_lv1ent.rw = rw;
    lv1ent.* = new_lv1ent;

    // No need to flush TLB because the page was not present before.
}
```

| Argument | Description |
|---|---|
| virt | Virtual address to map |
| phys | Physical address to map it to |
| attr | Attribute of the mapped page |
| bs | UEFI Boot Services, used to allocate memory for page tables |

Following the previous figure, the function starts from CR3 and walks the page tables down to the Lv1 entry. If a page table does not exist yet, that is, lvNent.present == false, a new page table is created with allocateNewTable(). Note that this function assumes the target virtual address is not mapped yet: it is only meant to create new mappings. If a mapping already exists, it behaves as follows:

- If the virtual address is already mapped to a 4KiB page: it returns the AlreadyMapped error.
- If the virtual address is already mapped by a page of 2MiB or larger: the .ps bit is not checked, so the mapped page itself is treated as a lower-level page table and an "entry" inside that page is overwritten. The existing mapping stays in effect while the contents of the mapped page are corrupted, so callers must avoid this case.

Once it reaches Lv1, it maps the page to the specified physical address using newMapPage(), setting the rw flag according to the page attribute: read/write if rw == true, read-only otherwise. Note that .xd is left false for every attribute, so even read_only pages remain executable in this simple implementation.

Note that this function does not need to flush the TLB at the end, since it only creates mappings for pages that were not present before. To modify an existing mapping, you need to reload CR3 or invalidate the stale TLB entry with an instruction such as invlpg.

allocateNewTable(), which allocates a new page table, is implemented as follows:

```zig
pub const kib = 1024;
pub const page_size_4k = 4 * kib;

fn allocateNewTable(T: type, entry: *T, bs: *BootServices) PageError!void {
    var ptr: Phys = undefined;
    const status = bs.allocatePages(.AllocateAnyPages, .BootServicesData, 1, @ptrCast(&ptr));
    if (status != .Success) return PageError.NoMemory;

    clearPage(ptr);
    entry.* = T.newMapTable(@ptrFromInt(ptr), true);
}

fn clearPage(addr: Phys) void {
    const page_ptr: [*]u8 = @ptrFromInt(addr);
    @memset(page_ptr[0..page_size_4k], 0);
}
```

We use Boot Services' AllocatePages() to allocate a single page, specifying BootServicesData[6] as the memory type. This page table continues to be used even after control is transferred from Surtr to Ymir, until Ymir creates her own mappings. In other words, this is not an area that Ymir can free and reuse as she likes. As described in a later chapter, by setting the memory type to BootServicesData, Surtr tells Ymir that this area is unavailable until she creates her own page tables.

The allocated page is zero-cleared with clearPage(). Finally, the physical address of the newly created page table is set to the entry.
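The control flow of map4kTo() and allocateNewTable() can be exercised outside of UEFI with a sketch like the one below. TablePool is a hypothetical stand-in for BootServices.allocatePages() that hands out zero-filled tables from a static pool, entries are raw u64 values with only P and R/W set, and, like the original, the walk does not check the PS bit:

```zig
const std = @import("std");

pub const Phys = u64;
pub const Virt = u64;

pub const PageError = error{ NoMemory, AlreadyMapped };

const present_bit: u64 = 1;
const present_rw: u64 = 0b11; // P | R/W
const addr_mask: u64 = 0x000F_FFFF_FFFF_F000;

/// Hypothetical stand-in for allocateNewTable()'s page allocation.
const TablePool = struct {
    tables: [8][512]u64 align(4096) = [_][512]u64{[_]u64{0} ** 512} ** 8,
    used: usize = 0,

    fn alloc(self: *TablePool) PageError!Phys {
        if (self.used == self.tables.len) return PageError.NoMemory;
        const table = &self.tables[self.used];
        self.used += 1;
        @memset(table, 0); // like clearPage(): every entry starts non-present
        return @intFromPtr(table);
    }
};

fn entryAt(table: Phys, vaddr: Virt, shift: u6) *u64 {
    const entries: [*]u64 = @ptrFromInt(table);
    const index: u9 = @truncate(vaddr >> shift);
    return &entries[index];
}

/// Same control flow as map4kTo() in the text, on raw entries.
fn map4kTo(lv4: Phys, virt: Virt, phys: Phys, pool: *TablePool) PageError!void {
    var table = lv4;
    // Lv4, Lv3 and Lv2: allocate the next-level table if it is missing.
    for ([_]u6{ 39, 30, 21 }) |shift| {
        const ent = entryAt(table, virt, shift);
        if ((ent.* & present_bit) == 0) ent.* = (try pool.alloc()) | present_rw;
        table = ent.* & addr_mask;
    }
    // Lv1: refuse to overwrite an existing 4KiB mapping.
    const lv1ent = entryAt(table, virt, 12);
    if ((lv1ent.* & present_bit) != 0) return PageError.AlreadyMapped;
    lv1ent.* = (phys & addr_mask) | present_rw;
}
```

Mapping the same address twice fails with AlreadyMapped without touching the pool, and a neighboring page such as 0xFFFF_FFFF_DEAD_1000 reuses all three tables allocated for 0xFFFF_FFFF_DEAD_0000.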

Making Lv4 Table Writable

We can now map a 4KiB page. Let's check that we can actually map an arbitrary virtual address. In boot.zig, map an address as follows:

```zig
const arch = @import("arch.zig");

arch.page.map4kTo(
    0xFFFF_FFFF_DEAD_0000,
    0x10_0000,
    .read_write,
    boot_service,
) catch |err| {
    log.err("Failed to map 4KiB page: {?}", .{err});
    return .Aborted;
};
```

The result looks like:

```
[DEBUG] (surtr): Kernel ELF information:
    Entry Point : 0x10012B0
    Is 64-bit : 1
    # of Program Headers: 4
    # of Section Headers: 16
!!!! X64 Exception Type - 0E(#PF - Page-Fault) CPU Apic ID - 00000000 !!!!
ExceptionData - 0000000000000003 I:0 R:0 U:0 W:1 P:1 PK:0 SS:0 SGX:0
RIP - 000000001E230E20, CS - 0000000000000038, RFLAGS - 0000000000010206
RAX - 000000001FC01FF8, RCX - 000000001E4DA103, RDX - 0007FFFFFFFFFFFF
RBX - 0000000000000000, RSP - 000000001FE963B0, RBP - 000000001FE96420
RSI - 0000000000000000, RDI - 000000001E284D18
R8 - 0000000000001000, R9 - 0000000000000000, R10 - 0000000000001000
R11 - 000000001FE96410, R12 - 0000000000000000, R13 - 000000001ED8D000
R14 - 0000000000000000, R15 - 000000001FEAFA20
DS - 0000000000000030, ES - 0000000000000030, FS - 0000000000000030
GS - 0000000000000030, SS - 0000000000000030
CR0 - 0000000080010033, CR2 - 000000001FC01FF8, CR3 - 000000001FC01000
```

A page fault has occurred[7]. The faulting address is stored in CR2, this time 0x1FC01FF8, and the error code (W:1 P:1) indicates a write to a present page. This address lies in the page pointed to by CR3 (0x1FC01000), i.e., the page containing the Lv4 table. The offset 0xFF8 is the offset of the Lv4 entry corresponding to the specified virtual address 0xFFFF_FFFF_DEAD_0000. Let's look at how the page containing the Lv4 table is mapped, using the vmmap command of gef:

```
Virtual address start-end Physical address start-end Total size Page size Count Flags
...
0x000000001fc00000-0x000000001fe00000 0x000000001fc00000-0x000000001fe00000 0x200000 0x200000 1 [R-X KERN ACCESSED DIRTY]
...
```

As the Flags column shows, the 2MiB page containing the UEFI-provided Lv4 table is mapped read-only (R-X). Because entries in the Lv4 table cannot be rewritten, we need to make the Lv4 table writable.
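The CR2 value in the log can be reproduced from CR3 and the target virtual address with a little arithmetic, assuming the identity mapping described earlier (so the faulting linear address equals the physical address of the Lv4 entry). lv4EntryAddr() is a hypothetical helper that only restates the index calculation of getEntry():

```zig
const std = @import("std");

pub const Phys = u64;
pub const Virt = u64;

/// Hypothetical helper: physical address of the Lv4 entry consulted for `vaddr`.
/// Under UEFI's identity mapping this is also the address reported in CR2
/// when a write to that entry faults.
fn lv4EntryAddr(cr3: Phys, vaddr: Virt) Phys {
    const table_base = cr3 & ~@as(u64, 0xFFF); // the Lv4 table is 4KiB-aligned
    const index = (vaddr >> 39) & 0x1FF; // bits 47:39 select the entry
    return table_base + index * @sizeOf(u64); // each entry is 8 bytes
}
```

Index 511 times 8 bytes gives the 0xFF8 offset, and 0x1FC01000 + 0xFF8 is exactly the 0x1FC01FF8 reported in CR2.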

To change the attributes of the page that holds the Lv4 table, we would have to modify the page table entry that maps it. However, that entry is also read-only and cannot be modified: a chicken-and-egg problem. Here, we work around it by copying the Lv4 table itself to a newly allocated page. Define the following function to make the Lv4 table writable:

```zig
pub fn setLv4Writable(bs: *BootServices) PageError!void {
    var new_lv4ptr: [*]Lv4Entry = undefined;
    const status = bs.allocatePages(.AllocateAnyPages, .BootServicesData, 1, @ptrCast(&new_lv4ptr));
    if (status != .Success) return PageError.NoMemory;

    const new_lv4tbl = new_lv4ptr[0..num_table_entries];
    const lv4tbl = getLv4Table(am.readCr3());
    @memcpy(new_lv4tbl, lv4tbl);

    am.loadCr3(@intFromPtr(new_lv4tbl.ptr));
}
```

Also, add the helper function loadCr3() to asm.zig:

```zig
pub inline fn loadCr3(cr3: u64) void {
    asm volatile (
        \\mov %[cr3], %%cr3
        :
        : [cr3] "r" (cr3),
    );
}
```

setLv4Writable() allocates a new page using Boot Services and copies all entries of the current Lv4 table into it. Finally, loadCr3() sets CR3 to the physical address of the new Lv4 table. From then on, the CPU uses the new Lv4 table, and reloading CR3 also flushes all non-global TLB entries. The page holding the new table is not mapped read-only, so the Lv4 table is now writable.

Let's make the Lv4 table writable in boot.zig before mapping the 4KiB page:

```zig
arch.page.setLv4Writable(boot_service) catch |err| {
    log.err("Failed to set page table writable: {?}", .{err});
    return .LoadError;
};
log.debug("Set page table writable.", .{});
```

Surtr should now reach the hlt loop normally, without a page fault. The page table at this point looks like this:

```
Virtual address start-end Physical address start-end Total size Page size Count Flags
0x0000000000000000-0x0000000000200000 0x0000000000000000-0x0000000000200000 0x200000 0x200000 1 [RWX KERN ACCESSED DIRTY]
...
0xffffffffdead0000-0xffffffffdead1000 0x0000000000100000-0x0000000000101000 0x1000 0x1000 1 [RWX KERN ACCESSED GLOBAL]
```

You can see that the specified virtual address 0xFFFF_FFFF_DEAD_0000 is mapped to the physical address 0x100000.

Summary

In this chapter, we defined a structure for page table entries in 4-level paging and implemented a function to map 4KiB pages. When actually mapping 4KiB pages, a page fault occurred because the Lv4 table was read-only. Therefore, we implemented a function to make the Lv4 table writable.

We are now ready to load the Ymir kernel at the requested virtual addresses. In the next chapter, we will implement the functions that actually load the Ymir kernel.

[1] Strictly speaking, some of the fields differ between entry levels, but for simplicity this series uses the same structure for all of them.

[2] Such a structure is called an integer-backed packed struct. If the total size of the fields differs from the specified size, a compile error occurs.

[3] The page tables provided by UEFI map virtual addresses to identical physical addresses, so code that confuses the two still works.

[4] After all, both are just u64 in Zig, so passing a Virt where a Phys is required (or vice versa) does not cause an error. The types serve only as annotations for readers of the code.

[5] Since segmentation is rarely used in x64, the "linear address" in the figure is practically synonymous with a virtual address.

[7] This exception handler is provided by UEFI.