General-Purpose Memory Allocator

In the Page Allocator chapter, we created a page allocator that allocates memory in page-sized units. Since this allocator implements the Allocator interface, it can be used for general-purpose memory allocation. However, because the backend is still a page allocator, the minimum allocated memory size is 4KiB - even when requesting much smaller sizes. In this chapter, we will implement a general-purpose allocator that can efficiently handle small memory allocations. This will allow us to allocate memory with significantly less overhead compared to the page allocator.

important

The source code for this chapter is in whiz-ymir-general_allocator branch.

Table of Contents

- Bin Allocator

- Metadata

- Initialization

- Allocation and Deallocation from Bin

- Implementing the Interface

- Summary

Bin Allocator

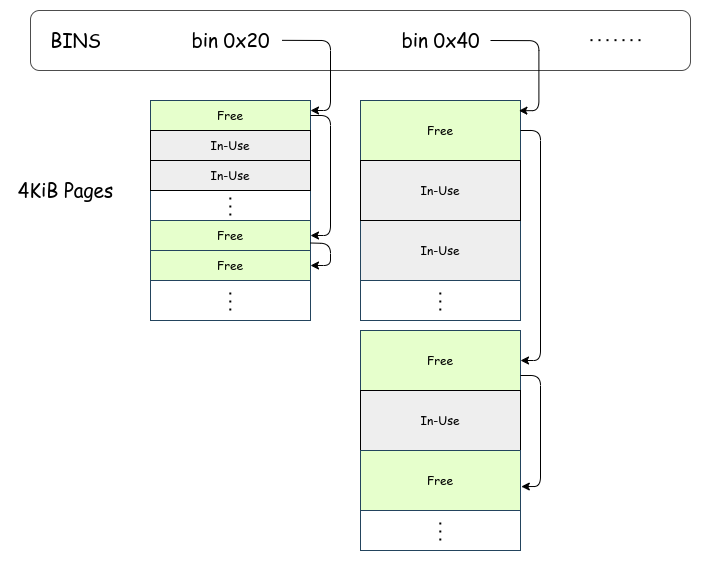

The general-purpose allocator we will implement in this chapter is called BinAllocator1. Like many widely used allocators, it manages fixed-size memory blocks called bins. Below is an overview of Ymir's general-purpose allocator:

Ymir BinAllocator Overview

Ymir BinAllocator Overview

Each bin maintains a linked list of fixed-size regions called chunks. A chunk can be either in-use or free. Free chunks hold a pointer to the next chunk, forming a linked list. The allocator does not track chunks that are currently in use.

Each chunk is created by splitting a 4KiB page. Therefore, a single page contains chunks of only one size. If there are no free chunks available in a bin's list, a new 4KiB page is allocated and divided into fixed-size chunks, which are then added as free chunks.

This allocator has less overhead compared to the page allocator. For example, when allocating a 0x10 byte region, the page allocator wastes 4096 - 16 = 4080 bytes. In contrast, the BinAllocator allocates from the 0x20 bin, reducing the overhead to 32 - 16 = 16 bytes. Of course, the overhead can be further minimized by making the bin sizes more granular.

Metadata

Like PageAllocator, BinAllocator treats the entire file as a single struct. Create a new file named BinAllocator.zig and define the necessary constants and variables:

pub const vtable = Allocator.VTable{

.alloc = allocate,

.free = free,

.resize = resize,

};

const bin_sizes = [_]usize{

0x20, 0x40, 0x80, 0x100, 0x200, 0x400, 0x800,

};

comptime {

if (bin_sizes[bin_sizes.len - 1] > 4096) {

@compileError("The largest bin size exceeds a 4KiB page size");

}

}

To implement the Allocator interface for BinAllocator as well, create a vtable field. The implementations of each function will be described later.

We prepared seven different bin sizes. While it's possible to provide even finer-grained sizes, making bins too small can degrade spatial locality of memory access, so simply increasing granularity isn't always beneficial. In this series, bins larger than the page size are not supported, and this is checked at compile time (comptime). If an allocation request exceeds the page size, it is delegated directly to the PageAllocator, which handles allocations on a page-by-page basis.

A free chunk holds metadata. This metadata contains information necessary for managing bins; the only information required by the BinAllocator is a pointer to the next free chunk. We define a struct to represent this metadata:

/// Heads of the chunk lists.

list_heads: [bin_sizes.len]ChunkMetaPointer,

const ChunkMetaNode = packed struct {

next: ChunkMetaPointer = null,

};

const ChunkMetaPointer = ?*ChunkMetaNode;

ChunkMetaNode contains only a pointer to the next chunk. If there is no next free chunk, it holds null. The list_heads stores pointers to the first chunk of each bin. In the diagram, the upper boxed area corresponds to the list_heads section.

Initialization

BinAllocator holds a PageAllocator as a backend allocator for allocating pages for chunks or handling allocation requests larger than the supported bin sizes. When initializing BinAllocator, it takes a PageAllocator as an argument:

page_allocator: Allocator,

pub fn init(self: *Self, page_allocator: Allocator) void {

self.page_allocator = page_allocator;

@memset(self.list_heads[0..self.list_heads.len], null);

}

During initialization, the head of each bin is set to null, indicating that all bins are empty. Next, we implement a function that allocates a new page and creates chunks when a bin is empty:

fn initBinPage(self: *Self, bin_index: usize) ?void {

const new_page = self.page_allocator.alloc(u8, 4096) catch return null;

const bin_size = bin_sizes[bin_index];

var i: usize = 4096 / bin_size - 1;

while (true) : (i -= 1) {

const chunk: *ChunkMetaNode = @ptrFromInt(@intFromPtr(new_page.ptr) + i * bin_size);

push(&self.list_heads[bin_index], chunk);

if (i == 0) break;

}

}

fn push(list_head: *ChunkMetaPointer, node: *ChunkMetaNode) void {

if (list_head.*) |next| {

node.next = next;

list_head.* = node;

} else {

list_head.* = node;

node.next = null;

}

}

It requests the PageAllocator to allocate a page. The allocated page is divided into chunks of size bin_size, and for each chunk, the following process inside the while loop is executed. Since each chunk is free immediately after creation, it is interpreted as a ChunkMetaNode. By pushing these ChunkMetaNodes onto the corresponding bin list, new chunks are added to the available chunks.

Allocation and Deallocation from Bin

The memory allocation function takes a bin index and retrieves a chunk from that bin:

fn allocFromBin(self: *Self, bin_index: usize) ?[*]u8 {

if (self.list_heads[bin_index] == null) {

initBinPage(self, bin_index) orelse return null;

}

return @ptrCast(pop(&self.list_heads[bin_index]));

}

fn pop(list_head: *ChunkMetaPointer) *ChunkMetaNode {

if (list_head.*) |first| {

list_head.* = first.next;

return first;

} else {

@panic("BinAllocator: pop from empty list");

}

}

If the list for the specified bin is empty, the previously implemented initBinPage() is called to allocate a new page. If the bin has chunks (or after creating new chunks), a chunk is popped from the list and returned to the caller. It's a straightforward process.

Memory deallocation performs the reverse operation of allocation:

fn freeToBin(self: *Self, bin_index: usize, ptr: [*]u8) void {

const chunk: *ChunkMetaNode = @alignCast(@ptrCast(ptr));

push(&self.list_heads[bin_index], chunk);

}

After returning a chunk to a bin, it is possible to free the page if all chunks within that page are free. However, for simplicity, we do not implement such a mechanism.

Implementing the Interface

With the functions for obtaining and returning chunks from bins implemented, we proceed to implement the functions required by the Allocator interface:

allocate

Unlike PageAllocator, BinAllocator may allocate regions smaller than the page size. Therefore, BinAllocator needs to respect the requested alignment. The requested alignment is passed as the third argument to the allocate() function:

fn allocate(ctx: *anyopaque, n: usize, log2_align: u8, _: usize) ?[*]u8 {

const self: *Self = @alignCast(@ptrCast(ctx));

const ptr_align = @as(usize, 1) << @as(Allocator.Log2Align, @intCast(log2_align));

const bin_index = binIndex(@max(ptr_align, n));

if (bin_index) |index| {

return self.allocFromBin(index);

} else {

const ret = self.page_allocator.alloc(u8, n) catch return null;

return @ptrCast(ret.ptr);

}

}

fn binIndex(size: usize) ?usize {

for (bin_sizes, 0..) |bin_size, i| {

if (size <= bin_size) {

return i;

}

}

return null;

}

The requested alignment ptr_align is calculated as \(\text{ptr_align} = 2^{\text{log2_align}}\). Since each bin’s chunks are guaranteed to be aligned to the bin’s size, if the requested alignment is smaller than the bin size, simply obtaining a chunk from that bin is sufficient. If the requested alignment is larger than the requested memory size, treat the alignment as the bin size. Once the bin is determined, call the previously defined allocFromBin() to allocate memory. If no suitable bin is found, delegate the allocation to the page allocator.

free

The free() function is called to deallocate memory. Its implementation mirrors that of allocate(). The free() function receives the size of the memory region to be freed as a slice. Therefore, there is no need to store which bin the memory belongs to (i.e., its size). This characteristic eliminates the need to store metadata at the start of an in-use chunk, unlike well-known heap implementations such as glibc:

fn free(ctx: *anyopaque, slice: []u8, log2_align: u8, _: usize) void {

const self: *Self = @alignCast(@ptrCast(ctx));

const ptr_align = @as(usize, 1) << @as(Allocator.Log2Align, @intCast(log2_align));

const bin_index = binIndex(@max(ptr_align, slice.len));

if (bin_index) |index| {

self.freeToBin(index, @ptrCast(slice.ptr));

} else {

self.page_allocator.free(slice);

}

}

resize

Similar to PageAllocator, BinAllocator does not support resizing allocations:

fn resize(_: *anyopaque, _: []u8, _: u8, _: usize, _: usize) bool {

@panic("BinAllocator does not support resizing");

}

Summary

Instantiate BinAllocator and make it ready for use:

pub fn newUninit() Self {

return Self{

.page_allocator = undefined,

.list_heads = undefined,

};

}

pub const general_allocator = Allocator{

.ptr = &bin_allocator_instance,

.vtable = &BinAllocator.vtable,

};

const BinAllocator = @import("mem/BinAllocator.zig");

var bin_allocator_instance = BinAllocator.newUninit();

pub fn initGeneralAllocator() void {

bin_allocator_instance.init(page_allocator);

}

bin_allocator_instance is an instance of BinAllocator. This instance itself is not exposed to other files. To use this allocator, access must always go through the Allocator interface.

Let's initialize the allocator in kernelMain():

ymir.mem.initGeneralAllocator();

log.info("Initialized general allocator.", .{});

Finally, let's try allocating memory using the freshly created allocator:

const p = try ymir.mem.general_allocator.alloc(u8, 0x4);

const q = try ymir.mem.general_allocator.alloc(u8, 0x4);

log.debug("p @ {X:0>16}", .{@intFromPtr(p.ptr)});

log.debug("q @ {X:0>16}", .{@intFromPtr(q.ptr)});

The result would look like:

[INFO ] main | Initialized general allocator.

[DEBUG] main | p @ FFFF888000008000

[DEBUG] main | q @ FFFF888000008020

Pointers p and q each refer to a memory region of 0x20 bytes. Since a 0x20 byte region is allocated from the 0x20-bin, you can see that p and q are adjacent to each other. Additionally, because the address map was reconstructed in the previous chapter, the virtual addresses used here are from the direct map region.

After searching, it seems that this name is not commonly used.