VM Entry and VM Exit

In the previous chapter, we successfully configured the VMCS and launched the guest using VMLAUNCH. However, we had not implemented much of the save and restore logic for the host and guest states before VM entry and after VM exit. This means that the guest and host were sharing register states and other context. In this chapter, we will implement proper handling of VM entry and VM exit, including saving and restoring states correctly and handling VM exits appropriately.

Important: The source code for this chapter is in the whiz-vmm-vmentry_vmexit branch.

Table of Contents

- Tracking Guest State

- VMLAUNCH and VMRESUME

- Error Handling

- VM Entry

- VM Exit

- Exit Handler

- Summary

- References

Tracking Guest State

The guest state is managed by the Vcpu structure. For now, we focus on saving the guest's general-purpose registers, which we collect in the following structure:

```zig
pub const GuestRegisters = extern struct {
    rax: u64,
    rcx: u64,
    rdx: u64,
    rbx: u64,
    rbp: u64,
    rsi: u64,
    rdi: u64,
    r8: u64,
    r9: u64,
    r10: u64,
    r11: u64,
    r12: u64,
    r13: u64,
    r14: u64,
    r15: u64,
    // Align to 16 bytes, otherwise movaps would cause #GP.
    xmm0: u128 align(16),
    xmm1: u128 align(16),
    xmm2: u128 align(16),
    xmm3: u128 align(16),
    xmm4: u128 align(16),
    xmm5: u128 align(16),
    xmm6: u128 align(16),
    xmm7: u128 align(16),
};
```

We save the integer general-purpose registers and eight XMM registers. RSP is not included because the guest RSP is saved and loaded through the guest-state area of the VMCS. Ideally, every floating-point register the CPU supports, including the AVX and AVX-512 state, should be saved, but doing so properly requires the XSAVE instruction. To keep things simple, Ymir deliberately does not support floating-point registers newer than SSE2 for the guest[1].
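Because movaps raises #GP when its memory operand is not 16-byte aligned, it can be worth letting the compiler check the layout. The following is a standalone sketch, not part of Ymir: it copies the GuestRegisters definition above and uses a comptime block to reject any XMM slot whose offset is not a multiple of 16. The explicit align(16) also raises the alignment of the whole struct to 16, so the compiler aligns guest_regs inside Vcpu accordingly, provided the Vcpu object itself is allocated with sufficient alignment.

```zig
const std = @import("std");

// Copy of the GuestRegisters layout shown above.
pub const GuestRegisters = extern struct {
    rax: u64,
    rcx: u64,
    rdx: u64,
    rbx: u64,
    rbp: u64,
    rsi: u64,
    rdi: u64,
    r8: u64,
    r9: u64,
    r10: u64,
    r11: u64,
    r12: u64,
    r13: u64,
    r14: u64,
    r15: u64,
    xmm0: u128 align(16),
    xmm1: u128 align(16),
    xmm2: u128 align(16),
    xmm3: u128 align(16),
    xmm4: u128 align(16),
    xmm5: u128 align(16),
    xmm6: u128 align(16),
    xmm7: u128 align(16),
};

// Compile-time check: every XMM slot must start on a 16-byte boundary
// relative to the struct, otherwise movaps on it would raise #GP.
comptime {
    const xmm_fields = [_][]const u8{ "xmm0", "xmm1", "xmm2", "xmm3", "xmm4", "xmm5", "xmm6", "xmm7" };
    inline for (xmm_fields) |name| {
        if (@offsetOf(GuestRegisters, name) % 16 != 0) {
            @compileError(name ++ " is not 16-byte aligned");
        }
    }
}

pub fn main() void {
    // 15 u64 fields occupy 120 bytes, so xmm0 is padded up to offset 128.
    std.debug.print("size={d} align={d} xmm0@{d}\n", .{
        @sizeOf(GuestRegisters),
        @alignOf(GuestRegisters),
        @offsetOf(GuestRegisters, "xmm0"),
    });
}
```

Running it prints `size=256 align=16 xmm0@128`: eight bytes of padding after r15 are the price of keeping every XMM slot movaps-safe.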

We add a field to the Vcpu structure to hold the guest's registers:

```zig
pub const Vcpu = struct {
    ...
    guest_regs: vmx.GuestRegisters = undefined,
    ...
};
```

VMLAUNCH and VMRESUME

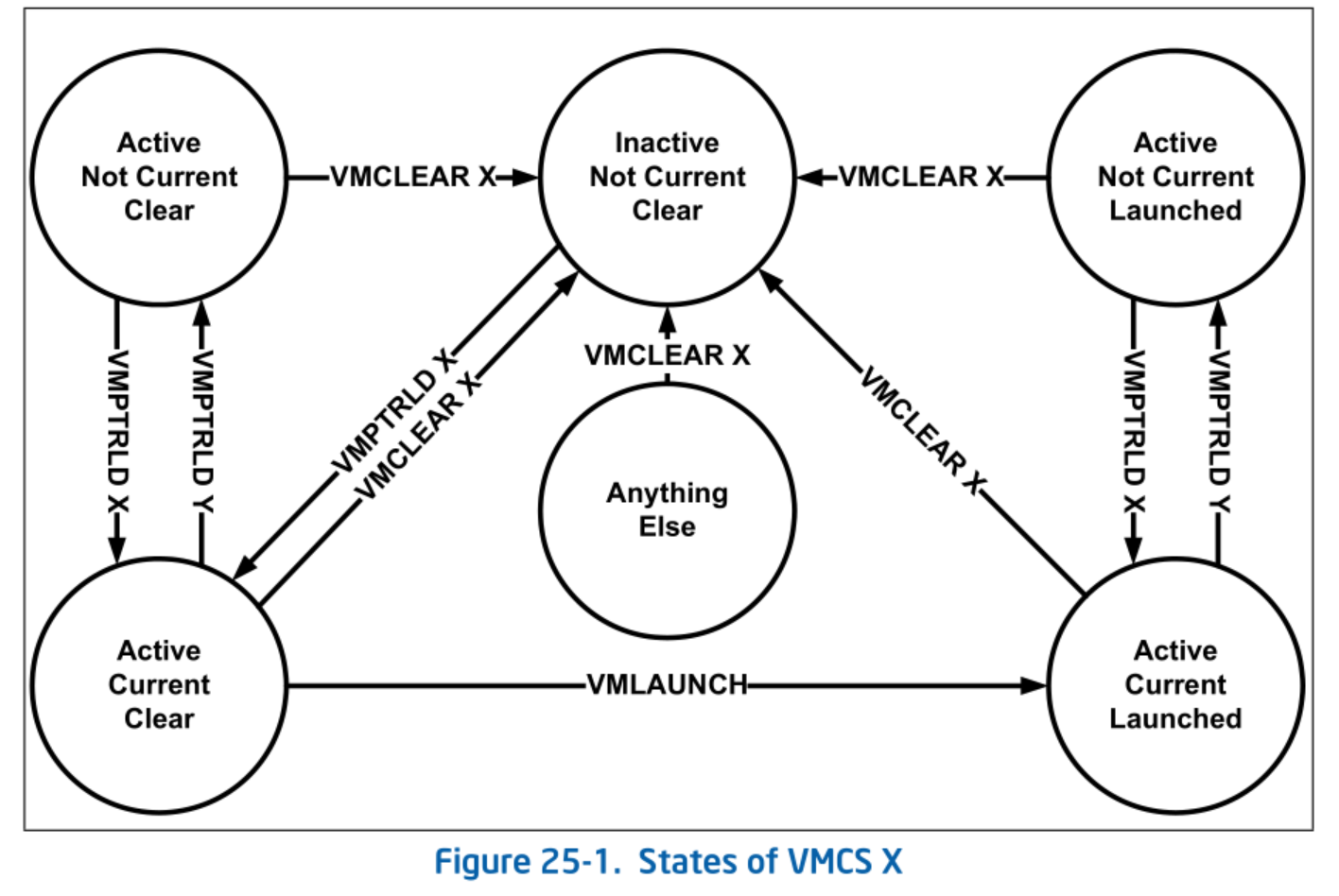

There are two instructions used to perform VM entry: VMLAUNCH and VMRESUME. These are used depending on the current state of the VMCS. As described in the Basics of VMCS chapter, the VMCS goes through the following state transitions:

State of VMCS X. SDM Vol.3C 25.1 Figure 25-1.

State of VMCS X. SDM Vol.3C 25.1 Figure 25-1.

Here we focus on the Clear and Launched states. Executing VMLAUNCH with a VMCS that is the CPU's current VMCS and is in the Clear state moves it to the Launched state. Any further VM entry with a VMCS in the Launched state must use VMRESUME. In other words, VMLAUNCH is used for the first VM entry, and VMRESUME for all subsequent ones. Using the wrong instruction makes it fail with a VMX instruction error.
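To make these rules concrete, here is a small host-side model of the launch state. It is a hypothetical sketch: VmcsModel and its methods are illustration-only names, no VMX instruction is executed, and all other VM-entry checks are ignored. It shows VMLAUNCH succeeding only on a Clear VMCS and VMRESUME only on a Launched one, using the Intel SDM's VM-instruction error numbers 4 (VMLAUNCH with non-clear VMCS) and 5 (VMRESUME with non-launched VMCS) for misuse.

```zig
const std = @import("std");

// Hypothetical software model of the VMCS launch state; real hardware keeps
// this state internally, and this code executes no VMX instructions.
const LaunchState = enum { clear, launched };

// VM-instruction error numbers (Intel SDM) for using the wrong instruction.
const InstError = enum(u32) {
    vmlaunch_non_clear_vmcs = 4,
    vmresume_non_launched_vmcs = 5,
};

const VmcsModel = struct {
    state: LaunchState = .clear,
    last_error: ?InstError = null,

    // VMLAUNCH is only valid on a Clear VMCS and moves it to Launched.
    fn vmlaunch(self: *VmcsModel) bool {
        if (self.state != .clear) {
            self.last_error = .vmlaunch_non_clear_vmcs;
            return false;
        }
        self.state = .launched;
        return true;
    }

    // VMRESUME is only valid on a Launched VMCS and leaves it Launched.
    fn vmresume(self: *VmcsModel) bool {
        if (self.state != .launched) {
            self.last_error = .vmresume_non_launched_vmcs;
            return false;
        }
        return true;
    }

    // VMCLEAR returns the VMCS to the Clear state.
    fn vmclear(self: *VmcsModel) void {
        self.state = .clear;
    }
};

pub fn main() void {
    var vmcs = VmcsModel{};
    const first = vmcs.vmlaunch(); // first entry: VMLAUNCH
    const second = vmcs.vmresume(); // later entries: VMRESUME
    const wrong = vmcs.vmlaunch(); // VMLAUNCH on a Launched VMCS fails
    // prints: true true false error=4
    std.debug.print("{} {} {} error={d}\n", .{ first, second, wrong, @intFromEnum(vmcs.last_error.?) });
}
```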

Software cannot query the launch state of a VMCS, so the VMM has to track it itself. We add a field to Vcpu that records whether the first VM entry has been done:

```zig
pub const Vcpu = struct {
    ...
    /// The first VM-entry has been done.
    launch_done: bool = false,
    ...
};
```

Error Handling

First, we implement the non-assembly part of VM entry. A VM entry can fail in two ways:

- VMLAUNCH or VMRESUME itself fails (VMX instruction error)
  - As with any other failing VMX instruction, it reports a VMX instruction error.
  - Execution resumes right after the VMLAUNCH or VMRESUME instruction, and the error is propagated back to loop().
- VMLAUNCH or VMRESUME succeeds, but VM entry fails and is immediately followed by a VM exit
  - The instruction itself succeeds, but entering the guest fails, for example because the guest-state checks fail. In that case bit 31 ("VM-entry failure") of the exit reason is set.
  - As with any VM exit, execution transfers to the RIP specified in the host-state area of the VMCS.

Let's see the former case first:

```zig
pub fn loop(self: *Self) VmxError!void {
    while (true) {
        // Enter VMX non-root operation.
        self.vmentry() catch |err| {
            log.err("VM-entry failed: {?}", .{err});
            if (err == VmxError.VmxStatusAvailable) {
                const inst_err = try vmx.InstructionError.load();
                log.err("VM Instruction error: {?}", .{inst_err});
            }
            self.abort();
        };

        ...
    }
}
```

self.vmentry() executes the VM-entry assembly routine, which is described later. It returns VmxError!void, so a failure is handled with catch. A failing VMX instruction reports one of two kinds of error:

- VmxStatusUnavailable: the instruction failed, and no error number is available.
- VmxStatusAvailable: the instruction failed, and an error number is available in the VMCS.
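VMX instructions report these outcomes through RFLAGS. CF=1 means VMfailInvalid, where no error number can be recorded (for example, because there is no current VMCS). ZF=1 means VMfailValid, where the error number is stored in the VM-instruction error field of the VMCS. Both flags clear means success. The sketch below maps those flags to the two error values; checkVmxStatus() is a hypothetical helper, not Ymir's API, and it assumes the caller has captured RFLAGS right after the instruction.

```zig
const std = @import("std");

const VmxError = error{ VmxStatusUnavailable, VmxStatusAvailable };

const rflags_cf: u64 = 1 << 0; // carry flag
const rflags_zf: u64 = 1 << 6; // zero flag

// Hypothetical helper: classify the outcome of a VMX instruction from the
// RFLAGS value captured right after it.
fn checkVmxStatus(rflags: u64) VmxError!void {
    // VMfailInvalid: no error number can be stored (e.g. no current VMCS).
    if ((rflags & rflags_cf) != 0) return VmxError.VmxStatusUnavailable;
    // VMfailValid: the error number is in the VM-instruction error field.
    if ((rflags & rflags_zf) != 0) return VmxError.VmxStatusAvailable;
    // VMsucceed: both CF and ZF are clear.
}

pub fn main() void {
    // Bit 1 of RFLAGS is always set, hence the 0x2 in every sample.
    const samples = [_]u64{ 0x2, 0x3, 0x42 };
    for (samples) |rflags| {
        checkVmxStatus(rflags) catch |err| {
            std.debug.print("0x{x}: {s}\n", .{ rflags, @errorName(err) });
            continue;
        };
        std.debug.print("0x{x}: success\n", .{rflags});
    }
}
```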

If an error code is available, we retrieve the VMX Instruction Error from the VMCS and display it. As long as the VMCS is configured correctly, VMX instructions should not fail. Therefore, in Ymir, a failure during VM entry is considered unrecoverable, and we call self.abort() to terminate execution:

```zig
pub fn abort(self: *Self) noreturn {
    @setCold(true);
    self.dump() catch log.err("Failed to dump VM information.", .{});
    ymir.endlessHalt();
}

pub fn dump(self: *Self) VmxError!void {
    try self.printGuestState();
}

fn printGuestState(self: *Self) VmxError!void {
    log.err("=== vCPU Information ===", .{});
    log.err("[Guest State]", .{});
    log.err("RIP: 0x{X:0>16}", .{try vmread(vmcs.guest.rip)});
    log.err("RSP: 0x{X:0>16}", .{try vmread(vmcs.guest.rsp)});
    log.err("RAX: 0x{X:0>16}", .{self.guest_regs.rax});
    log.err("RBX: 0x{X:0>16}", .{self.guest_regs.rbx});
    log.err("RCX: 0x{X:0>16}", .{self.guest_regs.rcx});
    log.err("RDX: 0x{X:0>16}", .{self.guest_regs.rdx});
    log.err("RSI: 0x{X:0>16}", .{self.guest_regs.rsi});
    log.err("RDI: 0x{X:0>16}", .{self.guest_regs.rdi});
    log.err("RBP: 0x{X:0>16}", .{self.guest_regs.rbp});
    log.err("R8 : 0x{X:0>16}", .{self.guest_regs.r8});
    log.err("R9 : 0x{X:0>16}", .{self.guest_regs.r9});
    log.err("R10: 0x{X:0>16}", .{self.guest_regs.r10});
    log.err("R11: 0x{X:0>16}", .{self.guest_regs.r11});
    log.err("R12: 0x{X:0>16}", .{self.guest_regs.r12});
    log.err("R13: 0x{X:0>16}", .{self.guest_regs.r13});
    log.err("R14: 0x{X:0>16}", .{self.guest_regs.r14});
    log.err("R15: 0x{X:0>16}", .{self.guest_regs.r15});
    log.err("CR0: 0x{X:0>16}", .{try vmread(vmcs.guest.cr0)});
    log.err("CR3: 0x{X:0>16}", .{try vmread(vmcs.guest.cr3)});
    log.err("CR4: 0x{X:0>16}", .{try vmread(vmcs.guest.cr4)});
    log.err("EFER:0x{X:0>16}", .{try vmread(vmcs.guest.efer)});
    log.err(
        "CS : 0x{X:0>4} 0x{X:0>16} 0x{X:0>8}",
        .{
            try vmread(vmcs.guest.cs_sel),
            try vmread(vmcs.guest.cs_base),
            try vmread(vmcs.guest.cs_limit),
        },
    );
}
```

Since dump() and abort() might be called separately (for example, when you want to dump the guest state for debugging and then continue), they are implemented as separate functions. When aborting, the guest state is output, and Ymir enters an infinite HLT loop.

VM Entry

Call Site

The previously mentioned vmentry() function wraps the assembly instructions VMLAUNCH and VMRESUME:

```zig
fn vmentry(self: *Self) VmxError!void {
    const success = asm volatile (
        \\mov %[self], %%rdi
        \\call asmVmEntry
        : [ret] "={ax}" (-> u8),
        : [self] "r" (self),
        : "rax", "rcx", "rdx", "rsi", "rdi", "r8", "r9", "r10", "r11"
    ) == 0;

    if (!self.launch_done and success) {
        self.launch_done = true;
    }

    if (!success) {
        const inst_err = try vmread(vmcs.ro.vminstruction_error);
        return if (inst_err != 0) VmxError.VmxStatusAvailable else VmxError.VmxStatusUnavailable;
    }
}
```

The pure assembly part is further separated into asmVmEntry(). This function takes a *Vcpu pointer as an argument. Details of asmVmEntry() will be explained later, but this argument is used for saving the host state and restoring the guest state.

There are several ways to structure VM entry and VM exit. Architecturally, once VM entry succeeds, control transfers to the guest, and on VM exit the CPU jumps to the host RIP recorded in the VMCS rather than to the instruction after VMLAUNCH or VMRESUME. So the function that performed the VM entry (vmentry()) does not return in the usual sense. Ymir, however, arranges the code so that the control flow looks like a normal function call, which makes it easier to follow: vmentry() → Guest → VM Exit Handler → vmentry(). How this is achieved is explained along with the implementation of the VM entry and VM exit handlers.

From the caller's point of view, then, asmVmEntry() behaves like a normal function: it returns 0 when control comes back through a VM exit, and 1 when the VMLAUNCH or VMRESUME instruction itself fails. If VM entry succeeds and this was the first VM entry, vmentry() sets launch_done to true, so subsequent calls to asmVmEntry() execute VMRESUME instead. If VM entry fails, vmentry() reads the VM-instruction error field and returns the corresponding error.
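This bookkeeping can be exercised without any VMX hardware. In the host-side sketch below, FakeVcpu and afterEntry() are hypothetical names: afterEntry() reproduces the Zig code that follows the inline assembly in vmentry(), with asmVmEntry()'s return value and the VM-instruction error field passed in as plain integers.

```zig
const std = @import("std");

const VmxError = error{ VmxStatusUnavailable, VmxStatusAvailable };

// Hypothetical stand-in for Vcpu that keeps only the field vmentry() updates.
const FakeVcpu = struct {
    launch_done: bool = false,

    // Mirrors the logic after the inline assembly in vmentry():
    // `ret` plays the role of asmVmEntry()'s return value (0 = came back via
    // VM exit, 1 = VMX instruction failed) and `inst_err` the value of the
    // VM-instruction error field.
    fn afterEntry(self: *FakeVcpu, ret: u8, inst_err: u32) VmxError!void {
        const success = ret == 0;
        if (!self.launch_done and success) {
            self.launch_done = true;
        }
        if (!success) {
            return if (inst_err != 0) VmxError.VmxStatusAvailable else VmxError.VmxStatusUnavailable;
        }
    }
};

pub fn main() void {
    var vcpu = FakeVcpu{};
    // A failed first entry (7: invalid control fields) leaves launch_done
    // false, so the next attempt would still use VMLAUNCH.
    vcpu.afterEntry(1, 7) catch |err| std.debug.print("first try: {s}\n", .{@errorName(err)});
    std.debug.print("launch_done={}\n", .{vcpu.launch_done});
    // A successful entry switches to VMRESUME from now on.
    vcpu.afterEntry(0, 0) catch unreachable;
    std.debug.print("launch_done={}\n", .{vcpu.launch_done});
}
```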

Saving Host State

Let's implement asmVmEntry(), which is called from vmentry(). vmentry() is an ordinary Zig function that returns an error union, and its calling convention is up to the Zig compiler. asmVmEntry(), in contrast, is written entirely in raw assembly, so we give it the .Naked calling convention, which tells the compiler not to generate any function prologue or epilogue.

First, we save the x64[2] callee-saved registers: RBX, RBP, and R12 through R15. RSP is also callee-saved, but it is saved separately later:

```zig
export fn asmVmEntry() callconv(.Naked) u8 {
    // Save callee saved registers.
    asm volatile (
        \\push %%rbp
        \\push %%r15
        \\push %%r14
        \\push %%r13
        \\push %%r12
        \\push %%rbx
    );

    ...
}
```

Next, we compute the address of the .guest_regs field of the *Vcpu argument into RBX and push it onto the stack. Since asmVmEntry() uses the .Naked calling convention and does not receive arguments the way an ordinary Zig function does, vmentry() explicitly places the *Vcpu pointer in RDI before the call. The offset of .guest_regs within Vcpu is computed with @offsetOf() and std.fmt.comptimePrint(). Because comptimePrint() produces its string at compile time, the result can be used as the template of asm volatile(). Computing the offset this way means no code changes are needed if the offset of .guest_regs changes:

```zig
    // Save a pointer to guest registers
    asm volatile (std.fmt.comptimePrint(
            \\lea {d}(%%rdi), %%rbx
            \\push %%rbx
        ,
            .{@offsetOf(Vcpu, "guest_regs")},
        ));
```
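To see what the assembler actually receives, the same comptimePrint() pattern can be run on the host. In this sketch, FakeVcpu is a hypothetical extern struct chosen so that the offset is predictable (16). The real Vcpu is an ordinary Zig struct whose field order the compiler is free to choose, which is exactly why @offsetOf() is used instead of a hard-coded number. Note that %% passes through std.fmt unchanged; it is the inline assembler that later turns it into a single %.

```zig
const std = @import("std");

// Hypothetical stand-in for Vcpu. `extern` fixes the layout so the offset is
// predictable here; the real Vcpu has no such guarantee.
const FakeVcpu = extern struct {
    id: u64,
    launch_done: bool,
    guest_regs: [16]u64,
};

// Same pattern as asmVmEntry(): the offset is baked into the template at
// compile time. std.fmt only interprets braces, so "%%" stays as is.
const save_regs_ptr = std.fmt.comptimePrint(
    \\lea {d}(%%rdi), %%rbx
    \\push %%rbx
, .{@offsetOf(FakeVcpu, "guest_regs")});

pub fn main() void {
    std.debug.print("{s}\n", .{save_regs_ptr});
}
```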

Next, we save the remaining callee-saved register, RSP. RSP is special: on VM exit, the CPU loads RSP from the host-state area of the VMCS, so the current value must be written there. Since executing VMWRITE directly from assembly is cumbersome, we implement a helper function with the C calling convention:

```zig
export fn setHostStack(rsp: u64) callconv(.C) void {
    vmwrite(vmcs.host.rsp, rsp) catch {};
}
```

We call this function in asmVmEntry() as follows:

```zig
    // Set host stack
    asm volatile (
        \\push %%rdi
        \\lea 8(%%rsp), %%rdi
        \\call setHostStack
        \\pop %%rdi
    );
```

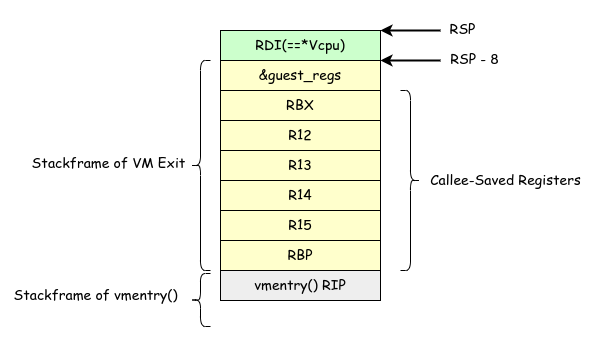

The stack layout just before calling setHostStack() looks like the diagram below. VM Exit starts with the yellow area on the stack as shown. Therefore, the RSP saved in the VMCS Host-State must point to this yellow region. Since RSP cannot be set directly with a MOV instruction, it is set indirectly using PUSH and LEA. Because PUSH is involved, the argument passed to setHostStack() is specified as +8(RSP).

Stack Layout Before VM Entry & After VM Exit

Stack Layout Before VM Entry & After VM Exit

After writing the host RSP to the VMCS, we POP the previously pushed RDI, so RDI again holds the *Vcpu pointer. The other field we need from *Vcpu is .launch_done, which determines whether to use VMLAUNCH or VMRESUME. We check it with TEST, which does not store its result anywhere and only updates RFLAGS. If .launch_done is false, the AND result is 0 and RFLAGS.ZF is set to 1; if it is true, ZF is cleared to 0:

```zig
    // Determine VMLAUNCH or VMRESUME.
    asm volatile (std.fmt.comptimePrint(
            \\testb $1, {d}(%%rdi)
        ,
            .{@offsetOf(Vcpu, "launch_done")},
        ));
```
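Because the flag logic is easy to get backwards, here is a host-side model of it. It is a sketch with hypothetical helper names: testbSetsZf() imitates `testb $1, m8` (a Zig bool occupies one byte holding 0 or 1), and entryInstruction() imitates the `jz .L_vmlaunch` sequence used further below.

```zig
const std = @import("std");

// Models `testb $1, m8`: TEST computes (1 & m8), discards the result and sets
// ZF when that result is zero.
fn testbSetsZf(byte: u8) bool {
    return (byte & 1) == 0;
}

// Models the jz/vmresume/vmlaunch selection that follows.
fn entryInstruction(launch_done: bool) []const u8 {
    const zf = testbSetsZf(@intFromBool(launch_done));
    return if (zf) "vmlaunch" else "vmresume";
}

pub fn main() void {
    std.debug.print("launch_done=false -> {s}\n", .{entryInstruction(false)});
    std.debug.print("launch_done=true  -> {s}\n", .{entryInstruction(true)});
}
```

The inversion is the point to remember: ZF=1 means "not launched yet", which is why the jump on ZF leads to VMLAUNCH.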

Restoring Guest State

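At this point, the host state has been saved on the stack. Next, we restore the guest state by loading each register from .guest_regs in turn. Because RAX holds the address of .guest_regs during this sequence, it is loaded last. LEA, MOV, and MOVAPS do not modify RFLAGS, so the ZF value produced by TEST above survives until the VM entry instruction: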

```zig
    // Restore guest registers.
    asm volatile (std.fmt.comptimePrint(
            \\lea {[guest_regs]}(%%rdi), %%rax
            \\mov {[rcx]}(%%rax), %%rcx
            \\mov {[rdx]}(%%rax), %%rdx
            \\mov {[rbx]}(%%rax), %%rbx
            \\mov {[rsi]}(%%rax), %%rsi
            \\mov {[rdi]}(%%rax), %%rdi
            \\mov {[rbp]}(%%rax), %%rbp
            \\mov {[r8]}(%%rax), %%r8
            \\mov {[r9]}(%%rax), %%r9
            \\mov {[r10]}(%%rax), %%r10
            \\mov {[r11]}(%%rax), %%r11
            \\mov {[r12]}(%%rax), %%r12
            \\mov {[r13]}(%%rax), %%r13
            \\mov {[r14]}(%%rax), %%r14
            \\mov {[r15]}(%%rax), %%r15
            \\movaps {[xmm0]}(%%rax), %%xmm0
            \\movaps {[xmm1]}(%%rax), %%xmm1
            \\movaps {[xmm2]}(%%rax), %%xmm2
            \\movaps {[xmm3]}(%%rax), %%xmm3
            \\movaps {[xmm4]}(%%rax), %%xmm4
            \\movaps {[xmm5]}(%%rax), %%xmm5
            \\movaps {[xmm6]}(%%rax), %%xmm6
            \\movaps {[xmm7]}(%%rax), %%xmm7
            \\mov {[rax]}(%%rax), %%rax
        , .{
            .guest_regs = @offsetOf(Vcpu, "guest_regs"),
            .rax = @offsetOf(vmx.GuestRegisters, "rax"),
            .rcx = @offsetOf(vmx.GuestRegisters, "rcx"),
            .rdx = @offsetOf(vmx.GuestRegisters, "rdx"),
            .rbx = @offsetOf(vmx.GuestRegisters, "rbx"),
            .rsi = @offsetOf(vmx.GuestRegisters, "rsi"),
            .rdi = @offsetOf(vmx.GuestRegisters, "rdi"),
            .rbp = @offsetOf(vmx.GuestRegisters, "rbp"),
            .r8 = @offsetOf(vmx.GuestRegisters, "r8"),
            .r9 = @offsetOf(vmx.GuestRegisters, "r9"),
            .r10 = @offsetOf(vmx.GuestRegisters, "r10"),
            .r11 = @offsetOf(vmx.GuestRegisters, "r11"),
            .r12 = @offsetOf(vmx.GuestRegisters, "r12"),
            .r13 = @offsetOf(vmx.GuestRegisters, "r13"),
            .r14 = @offsetOf(vmx.GuestRegisters, "r14"),
            .r15 = @offsetOf(vmx.GuestRegisters, "r15"),
            .xmm0 = @offsetOf(vmx.GuestRegisters, "xmm0"),
            .xmm1 = @offsetOf(vmx.GuestRegisters, "xmm1"),
            .xmm2 = @offsetOf(vmx.GuestRegisters, "xmm2"),
            .xmm3 = @offsetOf(vmx.GuestRegisters, "xmm3"),
            .xmm4 = @offsetOf(vmx.GuestRegisters, "xmm4"),
            .xmm5 = @offsetOf(vmx.GuestRegisters, "xmm5"),
            .xmm6 = @offsetOf(vmx.GuestRegisters, "xmm6"),
            .xmm7 = @offsetOf(vmx.GuestRegisters, "xmm7"),
        }));
```
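The offset list above is long but mechanical. As a hypothetical alternative that Ymir does not use, the same template text could be generated at compile time from the struct's fields, so adding a register would only mean adding a field. The sketch below handles integer registers only (the XMM slots would need movaps lines), and restoreSequence() and Regs are illustration-only names. It keeps the one ordering rule that matters: RAX is loaded last because it holds the base address.

```zig
const std = @import("std");

// Hypothetical, trimmed-down register struct (integer registers only).
const Regs = extern struct { rax: u64, rcx: u64, rdx: u64, rbx: u64 };

// Generates one "mov <offset>(%%rax), %%<reg>" line per field, emitting rax
// last because RAX holds the base address until the very end.
// Intended to be called at compile time.
fn restoreSequence(comptime T: type) []const u8 {
    comptime var out: []const u8 = "";
    inline for (std.meta.fields(T)) |field| {
        if (comptime std.mem.eql(u8, field.name, "rax")) continue;
        out = out ++ std.fmt.comptimePrint("mov {d}(%%rax), %%{s}\n", .{ @offsetOf(T, field.name), field.name });
    }
    return out ++ std.fmt.comptimePrint("mov {d}(%%rax), %%rax\n", .{@offsetOf(T, "rax")});
}

pub fn main() void {
    const seq = comptime restoreSequence(Regs);
    // Prints the template text, so registers still appear as "%%reg".
    std.debug.print("{s}", .{seq});
}
```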

With the host state saved and the guest state restored, it's finally time to execute VM entry. RFLAGS.ZF still holds the result of the TEST above: if ZF is 1 (this is the first entry), we jump to VMLAUNCH; otherwise we execute VMRESUME:

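```zig
    // VMLAUNCH or VMRESUME.
    // ZF=1 (first entry) jumps to VMLAUNCH; otherwise VMRESUME runs. If
    // VMRESUME fails, jump past VMLAUNCH so that a second, failing VMLAUNCH
    // does not overwrite the VM-instruction error reported by VMRESUME.
    asm volatile (
        \\jz .L_vmlaunch
        \\vmresume
        \\jmp .L_vmentry_failed
        \\.L_vmlaunch:
        \\vmlaunch
        \\.L_vmentry_failed:
    );
```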

If a VM Entry succeeds, execution transfers to the guest, so the instructions that follow will not be executed. If the VMX instruction fails, the subsequent instructions will run. Therefore, error handling is implemented immediately after:

```zig
    // Set return value to 1.
    asm volatile (
        \\mov $1, %%al
    );

    // Restore callee saved registers.
    asm volatile (
        \\add $0x8, %%rsp
        \\pop %%rbx
        \\pop %%r12
        \\pop %%r13
        \\pop %%r14
        \\pop %%r15
        \\pop %%rbp
    );

    // Return to caller of asmVmEntry()
    asm volatile (
        \\ret
    );
```

asmVmEntry() uses its own convention of returning 0 on success and 1 on failure, so here we set the return value to 1. As shown in the earlier diagram, the stack at this point holds &.guest_regs on top of the saved callee-saved registers. We discard the former by adding 8 to RSP, then POP the latter back into their respective registers and return to vmentry().

VM Exit

When the guest triggers a VM exit for any reason, execution transfers to the RIP set in the host-state area of the VMCS, and RSP is loaded from the host RSP field written by setHostStack(). Ymir writes the address of asmVmExit() to the host RIP field, so this function handles the return to the host. At this point the stack looks like the yellow area in the previous diagram, with &.guest_regs on top. We need this pointer to save the guest state, so we retrieve it first:

```zig
pub fn asmVmExit() callconv(.Naked) void {
    // Disable IRQ.
    asm volatile (
        \\cli
    );

    // Save guest RAX, get &guest_regs
    asm volatile (
        \\push %%rax
        \\movq 8(%%rsp), %%rax
    );

    ...
}
```

RAX is used as a scratch register. Since we cannot lose the guest's RAX, we push RAX onto the stack before retrieving &.guest_regs.

Next, we save the guest registers into guest_regs:

```zig
    // Save guest registers.
    asm volatile (std.fmt.comptimePrint(
            \\
            // Save pushed RAX.
            \\pop {[rax]}(%%rax)
            // Discard pushed &guest_regs.
            \\add $0x8, %%rsp
            // Save guest registers.
            \\mov %%rcx, {[rcx]}(%%rax)
            \\mov %%rdx, {[rdx]}(%%rax)
            \\mov %%rbx, {[rbx]}(%%rax)
            \\mov %%rsi, {[rsi]}(%%rax)
            \\mov %%rdi, {[rdi]}(%%rax)
            \\mov %%rbp, {[rbp]}(%%rax)
            \\mov %%r8, {[r8]}(%%rax)
            \\mov %%r9, {[r9]}(%%rax)
            \\mov %%r10, {[r10]}(%%rax)
            \\mov %%r11, {[r11]}(%%rax)
            \\mov %%r12, {[r12]}(%%rax)
            \\mov %%r13, {[r13]}(%%rax)
            \\mov %%r14, {[r14]}(%%rax)
            \\mov %%r15, {[r15]}(%%rax)
            \\movaps %%xmm0, {[xmm0]}(%%rax)
            \\movaps %%xmm1, {[xmm1]}(%%rax)
            \\movaps %%xmm2, {[xmm2]}(%%rax)
            \\movaps %%xmm3, {[xmm3]}(%%rax)
            \\movaps %%xmm4, {[xmm4]}(%%rax)
            \\movaps %%xmm5, {[xmm5]}(%%rax)
            \\movaps %%xmm6, {[xmm6]}(%%rax)
            \\movaps %%xmm7, {[xmm7]}(%%rax)
        ,
            .{
                .rax = @offsetOf(vmx.GuestRegisters, "rax"),
                .rcx = @offsetOf(vmx.GuestRegisters, "rcx"),
                .rdx = @offsetOf(vmx.GuestRegisters, "rdx"),
                .rbx = @offsetOf(vmx.GuestRegisters, "rbx"),
                .rsi = @offsetOf(vmx.GuestRegisters, "rsi"),
                .rdi = @offsetOf(vmx.GuestRegisters, "rdi"),
                .rbp = @offsetOf(vmx.GuestRegisters, "rbp"),
                .r8 = @offsetOf(vmx.GuestRegisters, "r8"),
                .r9 = @offsetOf(vmx.GuestRegisters, "r9"),
                .r10 = @offsetOf(vmx.GuestRegisters, "r10"),
                .r11 = @offsetOf(vmx.GuestRegisters, "r11"),
                .r12 = @offsetOf(vmx.GuestRegisters, "r12"),
                .r13 = @offsetOf(vmx.GuestRegisters, "r13"),
                .r14 = @offsetOf(vmx.GuestRegisters, "r14"),
                .r15 = @offsetOf(vmx.GuestRegisters, "r15"),
                .xmm0 = @offsetOf(vmx.GuestRegisters, "xmm0"),
                .xmm1 = @offsetOf(vmx.GuestRegisters, "xmm1"),
                .xmm2 = @offsetOf(vmx.GuestRegisters, "xmm2"),
                .xmm3 = @offsetOf(vmx.GuestRegisters, "xmm3"),
                .xmm4 = @offsetOf(vmx.GuestRegisters, "xmm4"),
                .xmm5 = @offsetOf(vmx.GuestRegisters, "xmm5"),
                .xmm6 = @offsetOf(vmx.GuestRegisters, "xmm6"),
                .xmm7 = @offsetOf(vmx.GuestRegisters, "xmm7"),
            },
        ));
```

After saving the guest state, we restore the host's callee-saved registers that were pushed onto the stack:

```zig
    // Restore callee saved registers.
    asm volatile (
        \\pop %%rbx
        \\pop %%r12
        \\pop %%r13
        \\pop %%r14
        \\pop %%r15
        \\pop %%rbp
    );
```

At this point, the top of the stack holds the return address pushed by the CALL instruction in vmentry(). Therefore, after setting RAX to 0 to signal success, executing RET returns control to vmentry(). The caller continues as if asmVmEntry() had simply returned from a normal function call:

```zig
    // Return to caller of asmVmEntry()
    asm volatile (
        \\mov $0, %%rax
        \\ret
    );
```
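The stack arithmetic in asmVmEntry() and asmVmExit() must balance exactly: the host RSP written to the VMCS has to point at &.guest_regs, and after the VM exit path pops everything, RET must find vmentry()'s return address. The following host-side simulation replays both paths push by push and checks those invariants. Stack, Result, and simulate() are hypothetical names, and all register values and addresses are placeholders.

```zig
const std = @import("std");

// Hypothetical model of a downward-growing stack. `sp` is a byte address;
// slot i of `mem` holds the 8-byte value stored at address base + 8*i.
const Stack = struct {
    mem: [16]u64 = [_]u64{0} ** 16,
    base: u64 = 0x1000,
    sp: u64 = 0x1000 + 16 * 8,

    fn slot(self: *const Stack, addr: u64) usize {
        return @intCast((addr - self.base) / 8);
    }
    fn push(self: *Stack, v: u64) void {
        self.sp -= 8;
        self.mem[self.slot(self.sp)] = v;
    }
    fn pop(self: *Stack) u64 {
        const v = self.mem[self.slot(self.sp)];
        self.sp += 8;
        return v;
    }
    fn load(self: *const Stack, addr: u64) u64 {
        return self.mem[self.slot(addr)];
    }
};

const Result = struct {
    host_rsp: u64,
    yellow_top: u64,
    regs_ptr: u64,
    guest_rax: u64,
    ret_addr: u64,
    sp_before_call: u64,
    sp_after_ret: u64,
};

fn simulate() Result {
    var s = Stack{};
    const sp_before_call = s.sp;
    s.push(0xAAAA); // return address pushed by `call asmVmEntry`

    // asmVmEntry(): rbp, r15, r14, r13, r12, rbx (placeholder values).
    for ([_]u64{ 1, 2, 3, 4, 5, 6 }) |v| s.push(v);
    s.push(0xBEEF0000); // &vcpu.guest_regs (placeholder address)
    const yellow_top = s.sp; // where RSP must point after a VM exit

    // push %rdi; lea 8(%rsp), %rdi; call setHostStack; pop %rdi
    s.push(0x5678);
    const host_rsp = s.sp + 8;
    _ = s.pop();

    // Guest runs; on VM exit the CPU loads RSP from the host-state area.
    s.sp = host_rsp;

    // asmVmExit(): push %rax; movq 8(%rsp), %rax; pop guest RAX; add $8
    s.push(0x1111); // guest RAX
    const regs_ptr = s.load(s.sp + 8);
    const guest_rax = s.pop();
    s.sp += 8;
    var i: usize = 0;
    while (i < 6) : (i += 1) _ = s.pop(); // restore callee-saved registers
    const ret_addr = s.pop(); // ret

    return .{
        .host_rsp = host_rsp,
        .yellow_top = yellow_top,
        .regs_ptr = regs_ptr,
        .guest_rax = guest_rax,
        .ret_addr = ret_addr,
        .sp_before_call = sp_before_call,
        .sp_after_ret = s.sp,
    };
}

pub fn main() void {
    const r = simulate();
    std.debug.print("host_rsp=0x{x} regs_ptr=0x{x} ret=0x{x}\n", .{ r.host_rsp, r.regs_ptr, r.ret_addr });
}
```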

Exit Handler

With this, the VM Entry and VM Exit sequence completes, allowing control to return from vmentry() to loop(). From here, appropriate handling is performed depending on the cause of the VM Exit. Let's define the VM Exit handler function:

```zig
fn handleExit(self: *Self, exit_info: vmx.ExitInfo) VmxError!void {
    switch (exit_info.basic_reason) {
        .hlt => {
            try self.stepNextInst();
            log.debug("HLT", .{});
        },
        else => {
            log.err("Unhandled VM-exit: reason={?}", .{exit_info.basic_reason});
            self.abort();
        },
    }
}

fn stepNextInst(_: *Self) VmxError!void {
    const rip = try vmread(vmcs.guest.rip);
    try vmwrite(vmcs.guest.rip, rip + try vmread(vmcs.ro.exit_inst_len));
}
```

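The handler takes an ExitInfo struct, which contains the basic reason for the VM exit, and switches on that reason. For now, only VM exits caused by HLT are handled: the handler calls stepNextInst(), which advances the guest RIP by the length of the exiting instruction (read from the VM-exit instruction length field) so that the guest resumes after the HLT instead of executing it again, and then logs that a HLT occurred. Any other reason aborts.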
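The dispatch and the RIP adjustment can be sketched on the host as follows. ExitReason, FakeGuest, and this handleExit() are hypothetical stand-ins: only a few basic exit reason numbers from the Intel SDM are listed, and the two VMCS fields used by stepNextInst() are replaced by plain struct fields.

```zig
const std = @import("std");

// Hypothetical subset of basic exit reasons (numbers from the Intel SDM).
const ExitReason = enum(u16) {
    exception_nmi = 0,
    extintr = 1,
    cpuid = 10,
    hlt = 12,
    _,
};

// Stand-in for the guest RIP and VM-exit instruction length VMCS fields.
const FakeGuest = struct {
    rip: u64,
    exit_inst_len: u64,
};

const ExitError = error{Unhandled};

fn handleExit(guest: *FakeGuest, reason: ExitReason) ExitError!void {
    switch (reason) {
        // Skip the instruction that caused the exit; otherwise the guest
        // would execute the same HLT again right after VM entry.
        .hlt => {
            guest.rip += guest.exit_inst_len;
        },
        else => return ExitError.Unhandled,
    }
}

pub fn main() void {
    // HLT (opcode F4) is one byte long.
    var guest = FakeGuest{ .rip = 0x1000, .exit_inst_len = 1 };
    handleExit(&guest, .hlt) catch unreachable;
    std.debug.print("rip=0x{x}\n", .{guest.rip}); // rip=0x1001
}
```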

ExitInfo is loaded from the exit reason field in the basic VM-exit information of the VMCS, and loop() passes it to handleExit() after every VM exit:

```zig
pub fn loop(self: *Self) VmxError!void {
    while (true) {
        ...
        try self.handleExit(try vmx.ExitInfo.load());
    }
}
```
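The exit reason field packs more than the reason number. The sketch below is a simplified, hypothetical decoder, not Ymir's vmx.ExitInfo: bits 15:0 hold the basic exit reason and bit 31 is set when VM entry itself failed. That bit is how the second failure mode from the Error Handling section shows up, for example as basic reason 33 (VM-entry failure due to invalid guest state). Bits 30:16 are lumped together here and not interpreted.

```zig
const std = @import("std");

// Simplified layout of the 32-bit exit reason field. Packed struct fields
// start at the least significant bit.
const ExitReasonField = packed struct(u32) {
    basic_reason: u16, // bits 15:0
    _other: u15, // bits 30:16, not interpreted here
    entry_failure: bool, // bit 31
};

fn decode(raw: u32) ExitReasonField {
    return @bitCast(raw);
}

pub fn main() void {
    const hlt = decode(12);
    const bad_guest = decode(0x8000_0021); // bit 31 set, basic reason 33
    // prints: 12 false / 33 true
    std.debug.print("{d} {} / {d} {}\n", .{ hlt.basic_reason, hlt.entry_failure, bad_guest.basic_reason, bad_guest.entry_failure });
}
```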

After calling the exit handler, control returns to the beginning of the while loop, and VM Entry is executed again. This cycle repeats endlessly.

Finally, in setupHostState(), we use vmwrite() to set the entry point for VM Exit:

```zig
const vmam = @import("asm.zig");

fn setupHostState(_: *Vcpu) VmxError!void {
    ...
    try vmwrite(vmcs.host.rip, &vmam.asmVmExit);
    ...
}
```

Summary

Now, let's run the guest with the VM entry and VM exit implemented. Set the .hlt field (HLT exiting, bit 7) of the primary processor-based VM-execution controls to true so that the HLT instruction causes a VM exit. Running the guest produces output similar to the following:

```
[INFO ] main | Entered VMX root operation.
[INFO ] main | Starting the virtual machine...
[DEBUG] vcpu | HLT
[DEBUG] vcpu | HLT
[DEBUG] vcpu | HLT
[DEBUG] vcpu | HLT
[DEBUG] vcpu | HLT
[DEBUG] vcpu | HLT
[DEBUG] vcpu | HLT
...
```

The output will continuously show HLT. This indicates that the VM Entry / VM Exit loop is functioning correctly. Additionally, for the host to produce output in the VM Exit handler and re-enter VM Entry, the host state must be properly saved and restored. In other words, this confirms that those mechanisms are also working as intended.
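The .hlt control mentioned above is the HLT-exiting bit, bit 7 of the primary processor-based VM-execution controls. Whatever types are used to build it, the value that finally reaches the VMCS must also respect the capability MSR for these controls: bits 31:0 are the allowed-0 settings (a 1 means the control must be 1) and bits 63:32 are the allowed-1 settings (a 0 means the control must be 0). The sketch below applies that rule; adjustControls() is a hypothetical helper, and the capability value is made up for illustration.

```zig
const std = @import("std");

// HLT exiting is bit 7 of the primary processor-based VM-execution controls.
const hlt_exiting: u32 = 1 << 7;

// Hypothetical helper: force the must-be-one bits on and the must-be-zero
// bits off, according to a capability MSR value.
fn adjustControls(desired: u32, cap_msr: u64) u32 {
    const must_be_one: u32 = @truncate(cap_msr); // allowed-0 settings
    const may_be_one: u32 = @truncate(cap_msr >> 32); // allowed-1 settings
    return (desired | must_be_one) & may_be_one;
}

pub fn main() void {
    // Made-up capability value, for illustration only.
    const cap: u64 = (0xFFFF_FFFF << 32) | 0x0401_E172;
    const ctls = adjustControls(hlt_exiting, cap);
    // prints: controls=0x0401E1F2 hlt_exiting=true
    std.debug.print("controls=0x{X:0>8} hlt_exiting={}\n", .{ ctls, (ctls & hlt_exiting) != 0 });
}
```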

If you want to check that the guest state is saved and restored correctly, set a guest register to a known value, for example self.guest_regs.rax = 0xDEADBEEF, before entering the while loop in loop(). Then disable VM exits on HLT and run the guest. The guest stops in its HLT loop, and inspecting the registers from the QEMU monitor (for example with info registers) at that point should show that RAX holds 0xDEADBEEF.

In this chapter, by properly implementing the VM Entry and VM Exit, we enabled repeated VM Entries. Considering that the previous chapter ended with a single VMLAUNCH, this represents a significant advancement. Although this chapter involved writing a lot of assembly code directly, rest assured that from here on, assembly will rarely appear.

References

[1] By "not supported," we do not mean that using these features results in undefined behavior; rather, they are disabled entirely. The guest determines whether these features are available from the CPUID instruction and the XCR0 register, and Ymir can control the values presented to the guest.

[2] You might find it odd that asmVmEntry() saves the callee-saved registers, yet vmentry() still marks registers as clobbered. The registers in that clobber list are the caller-saved ones. When inline assembly contains a CALL, the compiler does not generate code to save and restore the caller-saved registers around it. You therefore either write assembly that saves and restores them yourself before and after the CALL or, as in this case, mark them as clobbered so the compiler assumes their values are lost.