Paging

This chapter is the final part of the series where we replace what UEFI provides with Ymir's own implementations. The grand finale is the page tables. The page tables provided by UEFI use a direct mapping where virtual addresses are mapped directly to physical addresses. While Ymir's new mapping will also be a direct map, it will include an offset so that virtual and physical addresses differ. By the end of this chapter, you will be able to discard everything originally provided by UEFI.

Important: The source code for this chapter is in the whiz-ymir-paging branch.

Table of Contents

- Virtual Memory Layout

- Overview of Memory Map Reconstruction

- Allocating the New Lv4 Table

- Direct Map Region

- Cloning the Old Page Table

- Loading the New Table

- Virt-Phys Translation

- Refactoring Surtr

- Summary

Virtual Memory Layout

As described in the Loading Kernel Chapter, Ymir adopts the following virtual memory layout:

| Description | Virtual Address | Physical Address |
|---|---|---|
| Direct Map Region | 0xFFFF888000000000 - 0xFFFF88FFFFFFFFFF (512GiB) | 0x0 - 0x7FFFFFFFFF |
| Kernel Base | 0xFFFFFFFF80000000 - | 0x0 - |
| Kernel Text | 0xFFFFFFFF80100000 - | 0x100000 - |

Direct Map Region maps the entire physical address space directly. However, this direct mapping does not mean virtual and physical addresses are identical. There is an offset of 0xFFFF888000000000 between virtual and physical addresses. This region also includes the heap used by the allocator.

The Kernel Base and Kernel Text regions are designated areas for loading the kernel image. The linker script ymir/linker.ld instructs the loader to place the kernel image at these addresses. These virtual addresses have already been mapped during the loading kernel phase in Surtr.

As you can see by comparing the physical addresses mapped to each virtual address, the Direct Map Region and Kernel Base overlap. It is perfectly normal for the same physical address to be mapped to multiple virtual addresses. Since software primarily works with virtual addresses, mapping different virtual addresses helps make it clear which region an address belongs to. For example, seeing an address like 0xFFFFFFFF8010DEAD immediately indicates that it belongs to Ymir’s code region, which is quite convenient.
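As a minimal sketch of that convenience, the region can be read off the address value alone. The constants come from the table above; the `Region` enum and `classify()` are hypothetical names used only for this illustration and are not part of Ymir.

```zig
const std = @import("std");

// Values from the layout table above.
const direct_map_base: u64 = 0xFFFF_8880_0000_0000;
const direct_map_size: u64 = 512 << 30; // 512 GiB
const kernel_base: u64 = 0xFFFF_FFFF_8000_0000;

// Hypothetical helper type, for illustration only.
const Region = enum { direct_map, kernel, other };

fn classify(virt: u64) Region {
    if (virt >= kernel_base) return .kernel;
    if (virt >= direct_map_base and virt - direct_map_base < direct_map_size) return .direct_map;
    return .other;
}

pub fn main() void {
    const samples = [_]u64{ 0xFFFF_FFFF_8010_DEAD, 0xFFFF_8880_0010_0000, 0x1000 };
    for (samples) |virt| {
        std.debug.print("0x{x} -> {s}\n", .{ virt, @tagName(classify(virt)) });
    }
}
```

The first sample is classified as `kernel`, the second as `direct_map`, and the third as `other`.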

Of course, it’s also easy to adopt other layouts. The simplest approach is to map all virtual addresses 1:1 to physical addresses without any offset. For Ymir, as implemented in this series, there are no downsides to doing so. Feel free to choose whichever layout you prefer.

Overview of Memory Map Reconstruction

From here on, we will refer to the page tables provided by UEFI as the "old page tables" and the ones provided by Ymir as the "new page tables". To replace the old page tables with the new ones, follow these steps:

1. Allocate memory for the new Lv4 page table

2. Map the direct map region using 1GiB pages

3. Clone the mapping of the kernel base region from the old page table

4. Load the new page tables

In step 2, map 512 GiB starting from 0xFFFF888000000000, which is the direct map region. This can be done without referencing the old page tables. However, to know where the kernel is mapped, you need to consult the old page tables[^1]. Therefore, the kernel region must be cloned by referring to the old page tables.

Allocating the New Lv4 Table

The page.zig we previously implemented in Surtr can be copied almost directly to ymir/arch/x86/page.zig. Specifically, you should copy the various constants, the EntryBase struct, and functions like getTable() and getEntry().

A page table consists of 512 entries, each 8 bytes in size. That means its total size is 4 KiB (1 page). Since we allocate one page per page table, let's prepare a helper function to allocate a single page:

    fn allocatePage(allocator: Allocator) PageError![*]align(page_size_4k) u8 {
        return (allocator.alignedAlloc(
            u8,
            page_size_4k,
            page_size_4k,
        ) catch return PageError.OutOfMemory).ptr;
    }

The Allocator is backed by the PageAllocator we implemented in the previous chapter. However, you can use it as a standard Zig Allocator without needing to be aware that it's a PageAllocator. Since allocated pages require 4 KiB alignment, we use alignedAlloc() to allocate aligned memory regions.

Let's define reconstruct(), which rebuilds the memory mapping:

    pub fn reconstruct(allocator: Allocator) PageError!void {
        const lv4tbl_ptr: [*]Lv4Entry = @ptrCast(try allocatePage(allocator));
        const lv4tbl = lv4tbl_ptr[0..num_table_entries]; // 512
        @memset(lv4tbl, std.mem.zeroes(Lv4Entry));
        ...
    }

First, allocate a new Lv4 page table. The memory returned by allocatePage() is a many-item pointer, so we create a slice with 512 entries per table. We zero-fill the entire page table. Zero-filling ensures that the present field in each entry is set to 0, meaning nothing is mapped yet. For reference, here is the structure of a page table entry:

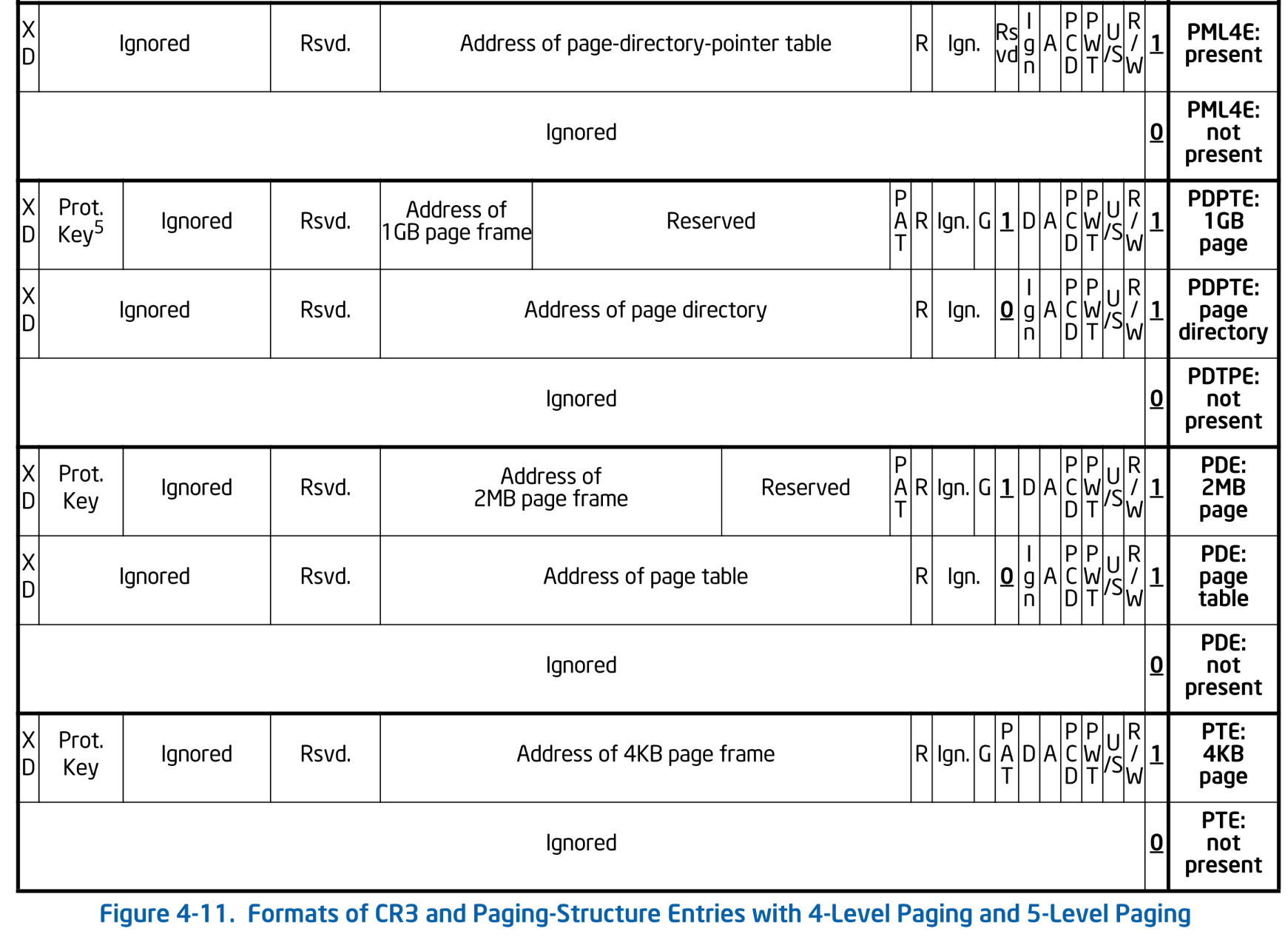

Formats of CR3 and Paging-Structure Entries with 4-Level Paging. SDM Vol.3A 4.5.5


Direct Map Region

Map the direct map region onto the newly created, pristine page table:

    const direct_map_base = ymir.direct_map_base;
    const direct_map_size = ymir.direct_map_size;

    pub fn reconstruct(allocator: Allocator) PageError!void {
        ...
        const lv4idx_start = (direct_map_base >> lv4_shift) & index_mask;
        const lv4idx_end = lv4idx_start + (direct_map_size >> lv4_shift);

        // Create the direct mapping using 1GiB pages.
        for (lv4tbl[lv4idx_start..lv4idx_end], 0..) |*lv4ent, i| {
            const lv3tbl: [*]Lv3Entry = @ptrCast(try allocatePage(allocator));
            for (0..num_table_entries) |lv3idx| {
                lv3tbl[lv3idx] = Lv3Entry.newMapPage(
                    (i << lv4_shift) + (lv3idx << lv3_shift),
                    true,
                );
            }
            lv4ent.* = Lv4Entry.newMapTable(lv3tbl, true);
        }
        ...
    }

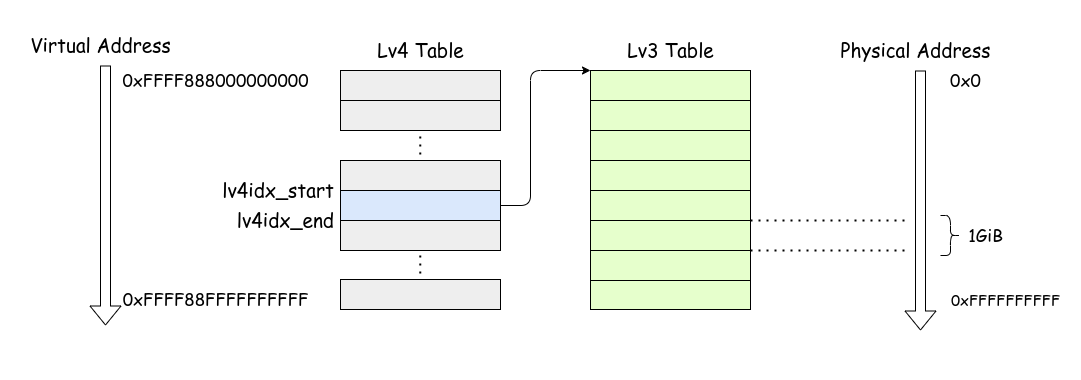

The direct_map_base is 0xFFFF888000000000. The Lv4 entry index that resolves this virtual address is obtained by shifting the address right by lv4_shift (39 bits) and masking the result with index_mask. lv4idx_end is calculated by adding the number of Lv4 entries needed to map 512 GiB to lv4idx_start. Since each Lv3 table has 512 entries, each capable of mapping 1 GiB, only one Lv3 table (and therefore one Lv4 entry) is required.
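The index calculation can be checked with a small standalone sketch. The shift of 39 bits is stated above; `lv3_shift = 30` and `index_mask = 0x1FF` are assumed here from the 1 GiB page size and the 512-entry tables, and the helper names `lv4Index()` and `lv3Index()` are hypothetical.

```zig
const std = @import("std");

const lv4_shift = 39;
const lv3_shift = 30; // assumed: one Lv3 entry covers 1 GiB
const index_mask: u64 = 0x1FF; // assumed: 9 index bits, 512 entries per table

// Hypothetical helpers that extract the table index at each level.
fn lv4Index(virt: u64) u64 {
    return (virt >> lv4_shift) & index_mask;
}

fn lv3Index(virt: u64) u64 {
    return (virt >> lv3_shift) & index_mask;
}

pub fn main() void {
    const direct_map_base: u64 = 0xFFFF_8880_0000_0000;
    const direct_map_size: u64 = 512 << 30;

    const start = lv4Index(direct_map_base); // 0x111 = 273
    const end = start + (direct_map_size >> lv4_shift); // 274
    std.debug.print("lv4idx_start={d} lv4idx_end={d}\n", .{ start, end });

    // The kernel base lands in the last Lv4 entry.
    const kernel_base: u64 = 0xFFFF_FFFF_8000_0000;
    std.debug.print("kernel: lv4={d} lv3={d}\n", .{ lv4Index(kernel_base), lv3Index(kernel_base) });
}
```

The direct map occupies Lv4 entry 273 only, while the kernel base resolves through Lv4 entry 511 and Lv3 entry 510, which is why the clone step only has to scan the entries above the direct map.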

Note: This time, we mapped the direct map region using 1 GiB pages. Using 4 KiB pages to map 1 GiB would require \(2^{18}\) entries. Since each entry is 8 bytes, the total would be \(2^{18} \times 8 = 2^{21}\) bytes, or 2 MiB. Mapping 512 GiB with 4 KiB pages would mean the page table entries alone would consume 1 GiB. By using larger pages, we can reduce the number of page table entries. This is why we chose to use 1 GiB pages in this case.
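The arithmetic in this note can be reproduced with a few lines of Zig. This is only a sketch: `leafEntryBytes()` is a hypothetical helper, and it counts only the lowest-level entries, ignoring the upper-level tables.

```zig
const std = @import("std");

const KiB: u64 = 1 << 10;
const GiB: u64 = 1 << 30;
const entry_size: u64 = 8; // bytes per page table entry

/// Bytes consumed by the leaf entries needed to map `region` bytes
/// with pages of `page` bytes. Upper-level tables are not counted.
fn leafEntryBytes(region: u64, page: u64) u64 {
    return (region / page) * entry_size;
}

pub fn main() void {
    // 1 GiB with 4 KiB pages: 2^18 entries * 8 bytes = 2 MiB.
    std.debug.print("1 GiB   / 4 KiB pages: {d} bytes\n", .{leafEntryBytes(GiB, 4 * KiB)});
    // 512 GiB with 4 KiB pages: 1 GiB of entries.
    std.debug.print("512 GiB / 4 KiB pages: {d} bytes\n", .{leafEntryBytes(512 * GiB, 4 * KiB)});
    // 512 GiB with 1 GiB pages: 512 entries, a single 4 KiB table.
    std.debug.print("512 GiB / 1 GiB pages: {d} bytes\n", .{leafEntryBytes(512 * GiB, GiB)});
}
```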

In the for loop, we allocate an Lv3 table for each Lv4 entry and create the corresponding entry. Since only one Lv3 table is needed this time, the loop runs just once, but it is designed to handle cases where the direct map region needs to be larger.

This function can be illustrated as follows:

Direct Map Region


Cloning the Old Page Table

Next, we clone the region mapped above the direct map region from the old tables. This region is used exclusively by the kernel image.

First, we scan the Lv4 entries to find entries to be cloned:

    pub fn reconstruct(allocator: Allocator) PageError!void {
        ...
        // Recursively clone tables for the kernel region.
        const old_lv4tbl = getLv4Table(am.readCr3());
        for (lv4idx_end..num_table_entries) |lv4idx| {
            if (old_lv4tbl[lv4idx].present) {
                const lv3tbl = getLv3Table(old_lv4tbl[lv4idx].address());
                const new_lv3tbl = try cloneLevel3Table(lv3tbl, allocator);
                lv4tbl[lv4idx] = Lv4Entry.newMapTable(new_lv3tbl.ptr, true);
            }
        }
        ...
    }

The address of the currently used Lv4 table can be obtained from the value in CR3. After retrieving the table using the previously implemented getLv4Table(), we search for valid (present) entries in the range above the direct map region. Valid Lv4 entries point to Lv3 page tables, but these Lv3 tables are also provided by UEFI, so new ones must be allocated and the contents copied.

After allocating the Lv3 page, recursively clone the tables pointed to by valid entries within that table in the same manner:

    fn cloneLevel3Table(lv3_table: []Lv3Entry, allocator: Allocator) PageError![]Lv3Entry {
        const new_lv3ptr: [*]Lv3Entry = @ptrCast(try allocatePage(allocator));
        const new_lv3tbl = new_lv3ptr[0..num_table_entries];
        @memcpy(new_lv3tbl, lv3_table);

        for (new_lv3tbl) |*lv3ent| {
            if (!lv3ent.present) continue;
            if (lv3ent.ps) continue; // Maps a 1GiB page; nothing to clone.

            const lv2tbl = getLv2Table(lv3ent.address());
            const new_lv2tbl = try cloneLevel2Table(lv2tbl, allocator);
            // virt2phys() takes an integer address, so convert the pointer first.
            lv3ent.phys = @truncate(virt2phys(@intFromPtr(new_lv2tbl.ptr)) >> page_shift_4k);
        }

        return new_lv3tbl;
    }

    fn cloneLevel2Table(lv2_table: []Lv2Entry, allocator: Allocator) PageError![]Lv2Entry {
        const new_lv2ptr: [*]Lv2Entry = @ptrCast(try allocatePage(allocator));
        const new_lv2tbl = new_lv2ptr[0..num_table_entries];
        @memcpy(new_lv2tbl, lv2_table);

        for (new_lv2tbl) |*lv2ent| {
            if (!lv2ent.present) continue;
            if (lv2ent.ps) continue; // Maps a 2MiB page; nothing to clone.

            const lv1tbl = getLv1Table(lv2ent.address());
            const new_lv1tbl = try cloneLevel1Table(lv1tbl, allocator);
            // virt2phys() takes an integer address, so convert the pointer first.
            lv2ent.phys = @truncate(virt2phys(@intFromPtr(new_lv1tbl.ptr)) >> page_shift_4k);
        }

        return new_lv2tbl;
    }

The cloning process for Lv3 and Lv2 tables is almost identical. The implementation could have been unified, as was done for the getLvXTable() functions, but since there are only two levels and each function is used just once, two separate functions are sufficient.

Unlike Lv4 entries, present Lv3 and Lv2 entries can either point to the next-level page table (ps == false) or map a physical page directly (ps == true). When a page is mapped, there is no lower-level table to create or copy, so those entries are skipped using continue.
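The decision made for each Lv3 or Lv2 entry can be summarised in a small sketch. The `Kind` enum and `kindOf()` are hypothetical names used only for illustration; the real code reads the present and ps fields directly from the entry.

```zig
const std = @import("std");

// Hypothetical classification of an Lv3/Lv2 entry, for illustration only.
const Kind = enum {
    not_present, // nothing mapped: skip
    maps_page, // 1 GiB (Lv3) or 2 MiB (Lv2) page: the copied entry is already complete
    points_to_table, // references a lower-level table: clone it and patch `phys`
};

fn kindOf(present: bool, ps: bool) Kind {
    if (!present) return .not_present;
    return if (ps) .maps_page else .points_to_table;
}

pub fn main() void {
    std.debug.print("{s}\n", .{@tagName(kindOf(true, false))});
    std.debug.print("{s}\n", .{@tagName(kindOf(true, true))});
    std.debug.print("{s}\n", .{@tagName(kindOf(false, false))});
}
```

Only the `points_to_table` case requires further work, which is why both clone functions `continue` in the other two cases.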

Lv1 entries never point to another table; they always map physical pages. Therefore, cloneLevel1Table() simply creates a new table and copies the entries.

    fn cloneLevel1Table(lv1_table: []Lv1Entry, allocator: Allocator) PageError![]Lv1Entry {
        const new_lv1ptr: [*]Lv1Entry = @ptrCast(try allocatePage(allocator));
        const new_lv1tbl = new_lv1ptr[0..num_table_entries];
        @memcpy(new_lv1tbl, lv1_table);

        return new_lv1tbl;
    }

With this, the existing mapping for the kernel region has been copied to the new Lv4 table.

Loading the New Table

Finally, load the new page table. The address of the Lv4 page table is updated by writing to the CR3 register.

    pub fn reconstruct(allocator: Allocator) PageError!void {
        ...
        const cr3 = @intFromPtr(lv4tbl) & ~@as(u64, 0xFFF);
        am.loadCr3(cr3);
    }

Writing to CR3 flushes the TLB, invalidating the old entries (entries for global pages are the exception and survive a CR3 write while CR4.PGE is set). From that point onward, the newly set page table is used for address translation.

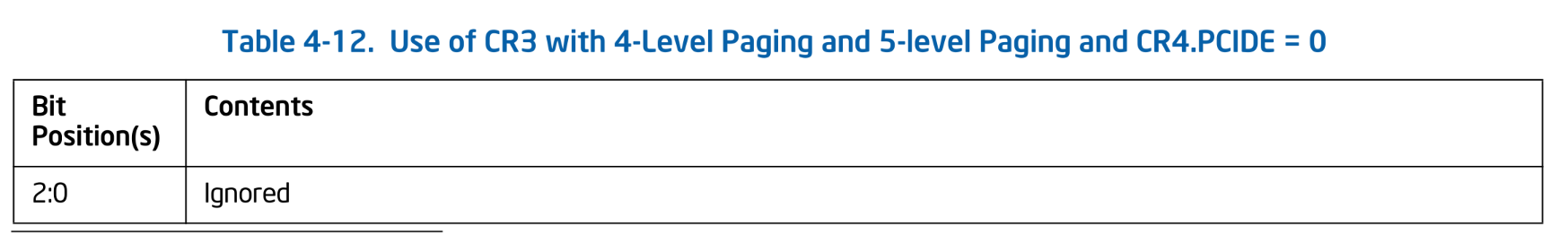

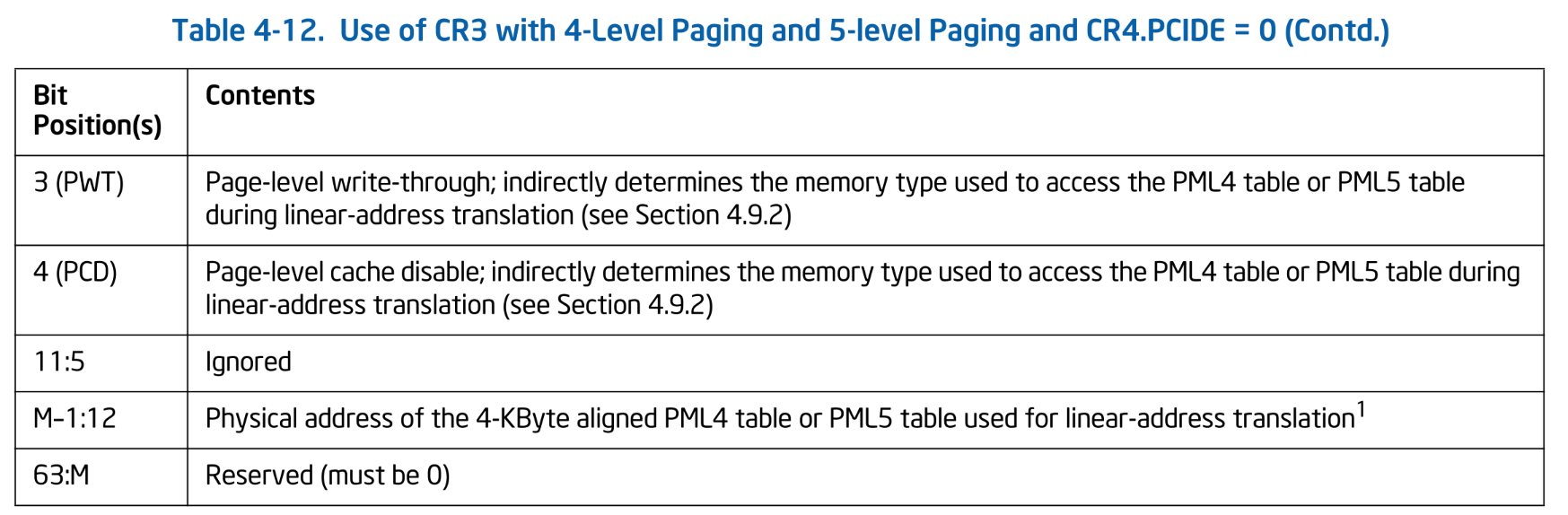

Note that, in addition to simply holding the physical address of the page table, CR3 also contains other fields:

Use of CR3 with 4-Level Paging and 5-level Paging and CR4.PCIDE = 0. SDM Vol.3A 4.5.2


Use of CR3 with 4-Level Paging and 5-level Paging and CR4.PCIDE = 0 (Contd.). SDM Vol.3A 4.5.2

In this series, Ymir does not use PCID (Process-Context Identifiers), so CR3 follows this table format[^2]. The M in the table is the physical address width, which is likely 46 for recent Core series processors. Bits 3 and 4 (PWT and PCD) affect the cache type used when accessing the Lv4 table. We simply set both bits to 0 without further consideration.
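As a sketch of how such a CR3 value is composed when PCID is disabled, the following standalone example builds it from a physical address and the two cache-control bits. `Cr3Flags` and `makeCr3()` are hypothetical names; Ymir itself simply masks off the low 12 bits as shown earlier.

```zig
const std = @import("std");

// Hypothetical flag set for illustration; Ymir leaves both bits cleared.
const Cr3Flags = struct {
    pwt: bool = false, // bit 3: page-level write-through
    pcd: bool = false, // bit 4: page-level cache disable
};

fn makeCr3(lv4_phys: u64, flags: Cr3Flags) u64 {
    // The table is 4 KiB aligned, so the low 12 bits carry no address information.
    const base = lv4_phys & ~@as(u64, 0xFFF);
    const pwt = @as(u64, @intFromBool(flags.pwt)) << 3;
    const pcd = @as(u64, @intFromBool(flags.pcd)) << 4;
    return base | pwt | pcd;
}

pub fn main() void {
    std.debug.print("0x{x}\n", .{makeCr3(0x1234_5000, .{})});
    std.debug.print("0x{x}\n", .{makeCr3(0x1234_5000, .{ .pcd = true })});
}
```

With both flags left at their defaults, the result is just the page-aligned physical address, which matches what reconstruct() writes.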

Virt-Phys Translation

Let's reconstruct the memory map using the functions implemented above:

    var mapping_reconstructed = false;

    pub fn reconstructMapping(allocator: Allocator) !void {
        try arch.page.reconstruct(allocator);
        mapping_reconstructed = true;
        ...
    }

By the way, in the Page Allocator chapter, we defined functions to convert between virtual and physical addresses. At that time, we were still using the UEFI page tables, so physical and virtual addresses were treated as identical. However, once reconstruct() has been called, the page tables are replaced and the virtual-to-physical mapping changes accordingly. For example, the virtual address 0x1000 used to be mapped to the physical address 0x1000, but it is no longer mapped at all in the new page tables. The translation functions therefore need to behave differently after the tables have been rebuilt:

    pub fn virt2phys(addr: u64) Phys {
        return if (!mapping_reconstructed) b: {
            // UEFI's page table.
            break :b addr;
        } else if (addr < ymir.kernel_base) b: {
            // Direct map region.
            break :b addr - ymir.direct_map_base;
        } else b: {
            // Kernel image mapping region.
            break :b addr - ymir.kernel_base;
        };
    }

    pub fn phys2virt(addr: u64) Virt {
        return if (!mapping_reconstructed) b: {
            // UEFI's page table.
            break :b addr;
        } else b: {
            // Direct map region.
            break :b addr + ymir.direct_map_base;
        };
    }

We use mapping_reconstructed to track whether the page table has been rebuilt, and based on its value, we adjust the conversion logic. For virtual-to-physical translation, we need to determine whether the virtual address belongs to the direct map region or the kernel image region. On the other hand, for physical-to-virtual translation, we always return the virtual address in the direct map region. Although physical addresses in the kernel image area are mapped to two virtual addresses, including the kernel region address, we keep it simple here by only returning the direct map virtual address.
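A consequence of this choice is that the two functions are not inverses for kernel image addresses. The following standalone sketch mirrors only the post-reconstruction branches with the constants from the layout table; it is an illustration, not the actual mem.zig.

```zig
const std = @import("std");

const direct_map_base: u64 = 0xFFFF_8880_0000_0000;
const kernel_base: u64 = 0xFFFF_FFFF_8000_0000;

// Post-reconstruction behavior only (mapping_reconstructed == true).
fn virt2phys(addr: u64) u64 {
    return if (addr < kernel_base) addr - direct_map_base else addr - kernel_base;
}

fn phys2virt(addr: u64) u64 {
    return addr + direct_map_base;
}

pub fn main() void {
    const kernel_text: u64 = 0xFFFF_FFFF_8010_0000;
    const phys = virt2phys(kernel_text); // 0x100000
    const alias = phys2virt(phys); // 0xFFFF888000100000, not kernel_text

    std.debug.print("phys  = 0x{x}\n", .{phys});
    std.debug.print("alias = 0x{x}\n", .{alias});
    // Both virtual addresses still resolve to the same physical address.
    std.debug.print("same physical page: {}\n", .{virt2phys(alias) == phys});
}
```

Converting a kernel text address to physical and back yields the direct map alias rather than the original address, so code should not rely on `phys2virt(virt2phys(x)) == x` for addresses in the kernel image region.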

Now, the page table reconstruction is complete. It is called from kernelMain():

    log.info("Reconstructing memory mapping...", .{});
    try mem.reconstructMapping(mem.page_allocator);

When running Ymir and attaching GDB after entering the HALT loop, the memory map appears as follows:

    Virtual address start-end              Physical address start-end             Total size   Page size   Count  Flags
    0xffff888000000000-0xffff890000000000  0x0000000000000000-0x0000008000000000  0x8000000000 0x40000000  512    [RWX KERN ACCESSED GLOBAL]
    0xffffffff80100000-0xffffffff80517000  0x0000000000100000-0x0000000000517000  0x417000     0x1000      1047   [RWX KERN ACCESSED DIRTY GLOBAL]

You can see that both the direct map region and the kernel image region are mapped. The map reconstruction is complete.

Refactoring Surtr

Info: You can skip this section.

I noticed something when looking at the new address map. Despite setting up segment layouts properly in linker.ld, the kernel image area ended up as a single RWX region. This happened because Surtr ignored the segment attributes when loading the kernel in the Loading Kernel chapter. As a result, all segments were mapped with the same RWX permissions, making them appear as one continuous region. The stack guard page we carefully created is completely ineffective under this condition.

With that said, we will modify Surtr's kernel loader to respect the segment attributes. First, let's define a function to change the attributes of a 4KiB page:

    pub const PageAttribute = enum {
        read_only,
        read_write,
        executable,

        pub fn fromFlags(flags: u32) PageAttribute {
            return if (flags & elf.PF_X != 0) .executable else if (flags & elf.PF_W != 0) .read_write else .read_only;
        }
    };

    pub fn changeMap4k(virt: Virt, attr: PageAttribute) PageError!void {
        const rw = switch (attr) {
            .read_only, .executable => false,
            .read_write => true,
        };

        const lv4ent = getLv4Entry(virt, am.readCr3());
        if (!lv4ent.present) return PageError.NotPresent;
        const lv3ent = getLv3Entry(virt, lv4ent.address());
        if (!lv3ent.present) return PageError.NotPresent;
        const lv2ent = getLv2Entry(virt, lv3ent.address());
        if (!lv2ent.present) return PageError.NotPresent;
        const lv1ent = getLv1Entry(virt, lv2ent.address());
        if (!lv1ent.present) return PageError.NotPresent;

        lv1ent.rw = rw;
        am.flushTlbSingle(virt);
    }

This function implicitly assumes that the specified virtual address is mapped to a 4KiB physical page. If a 1GiB or 2MiB page happens to be mapped in the middle of translation, it would mistakenly treat those entries as page tables, leading to critical bugs. However, since Surtr uses only 4KiB pages when loading the kernel, this assumption holds. If it encounters a non-present (present == false) page entry along the way, the function gracefully returns an error.

The helper function that flushes a specific TLB entry uses the INVLPG instruction:

    pub inline fn flushTlbSingle(virt: u64) void {
        asm volatile (
            \\invlpg (%[virt])
            :
            : [virt] "r" (virt),
            : "memory"
        );
    }

Next, we update the kernel loading logic:

    // Where the kernel is loaded segment by segment.
    while (true) {
        const phdr = iter.next() ...;
        ...

        // Change memory protection.
        const page_start = phdr.p_vaddr & ~page_mask;
        const page_end = (phdr.p_vaddr + phdr.p_memsz + (page_size - 1)) & ~page_mask;
        const size = (page_end - page_start) / page_size;
        const attribute = arch.page.PageAttribute.fromFlags(phdr.p_flags);
        for (0..size) |i| {
            arch.page.changeMap4k(
                page_start + page_size * i,
                attribute,
            ) catch |err| {
                log.err("Failed to change memory protection: {?}", .{err});
                return .LoadError;
            };
        }
    }

At the end of the while loop that reads each segment header and loads the kernel, we add logic to update the attributes of the pages where the segment was loaded. Note that in this series, we ignore the execute-disable attribute of segments. If you want to support it, you can add an xd field to the end of the page.EntryBase struct. When this field is set to true, the page becomes NX (non-executable). The original Ymir supports NX pages, so feel free to refer to it if you're interested.
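As a rough sketch of what NX support could look like, the attribute would determine two leaf-entry bits instead of one. The `LeafBits` struct and `leafBits()` below are hypothetical and are not taken from the original Ymir. The sketch also assumes that IA32_EFER.NXE has been enabled, since bit 63 of an entry is only interpreted as execute-disable in that case.

```zig
const std = @import("std");

const PageAttribute = enum { read_only, read_write, executable };

// Hypothetical: the leaf-entry bits an NX-aware changeMap4k() might set.
const LeafBits = struct { rw: bool, xd: bool };

// XD occupies the most significant bit of a paging-structure entry.
const xd_bit: u64 = 1 << 63;

fn leafBits(attr: PageAttribute) LeafBits {
    return switch (attr) {
        .read_only => .{ .rw = false, .xd = true },
        .read_write => .{ .rw = true, .xd = true },
        .executable => .{ .rw = false, .xd = false },
    };
}

pub fn main() void {
    const attrs = [_]PageAttribute{ .read_only, .read_write, .executable };
    for (attrs) |attr| {
        const bits = leafBits(attr);
        std.debug.print("{s}: rw={} xd={}\n", .{ @tagName(attr), bits.rw, bits.xd });
    }
    std.debug.print("xd mask = 0x{x}\n", .{xd_bit});
}
```

With such a mapping, only the executable segment would remain executable, while data and read-only segments would fault on instruction fetch.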

With that, Surtr now loads Ymir's segments with the proper attributes. The current memory map looks like this:

    Virtual address start-end              Physical address start-end             Total size   Page size   Count  Flags
    0xffff888000000000-0xffff890000000000  0x0000000000000000-0x0000008000000000  0x8000000000 0x40000000  512    [RWX KERN ACCESSED GLOBAL]
    0xffffffff80100000-0xffffffff8013a000  0x0000000000100000-0x000000000013a000  0x3a000      0x1000      58     [R-X KERN ACCESSED DIRTY GLOBAL]
    0xffffffff8013a000-0xffffffff8013f000  0x000000000013a000-0x000000000013f000  0x5000       0x1000      5      [R-- KERN ACCESSED DIRTY GLOBAL]
    0xffffffff8013f000-0xffffffff80542000  0x000000000013f000-0x0000000000542000  0x403000     0x1000      1027   [RW- KERN ACCESSED DIRTY GLOBAL]
    0xffffffff80542000-0xffffffff80543000  0x0000000000542000-0x0000000000543000  0x1000       0x1000      1      [R-- KERN ACCESSED DIRTY GLOBAL]
    0xffffffff80543000-0xffffffff80548000  0x0000000000543000-0x0000000000548000  0x5000       0x1000      5      [RW- KERN ACCESSED DIRTY GLOBAL]
    0xffffffff80548000-0xffffffff8054a000  0x0000000000548000-0x000000000054a000  0x2000       0x1000      2      [R-- KERN ACCESSED DIRTY GLOBAL]

The direct map region remains uniformly RWX as before, but you can see that the kernel region is now mapped according to each segment's attributes. This matches the segment information visible with readelf:

> readelf --segment --sections ./zig-out/bin/ymir.elf

Section Headers:

[Nr] Name Type Address Offset

Size EntSize Flags Link Info Align

[ 0] NULL 0000000000000000 00000000

0000000000000000 0000000000000000 0 0 0

[ 1] .text PROGBITS ffffffff80100000 00001000

00000000000093fc 0000000000000000 AXl 0 0 16

[ 2] .ltext.unlikely. PROGBITS ffffffff80109400 0000a400

00000000000025a4 0000000000000000 AXl 0 0 16

[ 3] .ltext.memcpy PROGBITS ffffffff8010b9b0 0000c9b0

00000000000000d9 0000000000000000 AXl 0 0 16

[ 4] .ltext.memset PROGBITS ffffffff8010ba90 0000ca90

00000000000000ce 0000000000000000 AXl 0 0 16

[ 5] .rodata PROGBITS ffffffff8010c000 0000d000

00000000000000d0 0000000000000000 A 0 0 8

[ 6] .rodata.cst16 PROGBITS ffffffff8010c0d0 0000d0d0

0000000000000060 0000000000000010 AM 0 0 16

[ 7] .rodata.cst4 PROGBITS ffffffff8010c130 0000d130

0000000000000014 0000000000000004 AM 0 0 4

[ 8] .rodata.str1.1 PROGBITS ffffffff8010c144 0000d144

00000000000002b5 0000000000000001 AMSl 0 0 1

[ 9] .lrodata PROGBITS ffffffff8010c400 0000d400

000000000000007b 0000000000000000 Al 0 0 8

[10] .data PROGBITS ffffffff8010d000 0000e000

0000000000400060 0000000000000000 WAl 0 0 16

[11] .bss NOBITS ffffffff8050e000 0040f000

0000000000002000 0000000000000000 WAl 0 0 4096

[12] __stackguard[...] NOBITS ffffffff80510000 0040f000

0000000000001000 0000000000000000 WA 0 0 1

[13] __stack NOBITS ffffffff80511000 0040f000

0000000000005000 0000000000000000 WA 0 0 1

[14] __stackguard[...] NOBITS ffffffff80516000 0040f000

0000000000001000 0000000000000000 WA 0 0 1

[15] .debug_loc PROGBITS 0000000000000000 0040f000

0000000000072c0d 0000000000000000 0 0 1

[16] .debug_abbrev PROGBITS 0000000000000000 00481c0d

000000000000087d 0000000000000000 0 0 1

[17] .debug_info PROGBITS 0000000000000000 0048248a

00000000000359c9 0000000000000000 0 0 1

[18] .debug_ranges PROGBITS 0000000000000000 004b7e53

000000000000f8c0 0000000000000000 0 0 1

[19] .debug_str PROGBITS 0000000000000000 004c7713

000000000000d940 0000000000000001 MS 0 0 1

[20] .debug_pubnames PROGBITS 0000000000000000 004d5053

0000000000005c03 0000000000000000 0 0 1

[21] .debug_pubtypes PROGBITS 0000000000000000 004dac56

0000000000001103 0000000000000000 0 0 1

[22] .debug_frame PROGBITS 0000000000000000 004dbd60

00000000000059c0 0000000000000000 0 0 8

[23] .debug_line PROGBITS 0000000000000000 004e1720

000000000001663e 0000000000000000 0 0 1

[24] .comment PROGBITS 0000000000000000 004f7d5e

0000000000000013 0000000000000001 MS 0 0 1

[25] .symtab SYMTAB 0000000000000000 004f7d78

0000000000001e90 0000000000000018 27 316 8

[26] .shstrtab STRTAB 0000000000000000 004f9c08

0000000000000142 0000000000000000 0 0 1

[27] .strtab STRTAB 0000000000000000 004f9d4a

000000000000337e 0000000000000000 0 0 1

There are 7 program headers, starting at offset 64

Program Headers:

Type Offset VirtAddr PhysAddr

FileSiz MemSiz Flags Align

LOAD 0x0000000000001000 0xffffffff80100000 0x0000000000100000

0x000000000000bb5e 0x000000000000bb5e R E 0x1000

LOAD 0x000000000000d000 0xffffffff8010c000 0x000000000010c000

0x000000000000047b 0x000000000000047b R 0x1000

LOAD 0x000000000000e000 0xffffffff8010d000 0x000000000010d000

0x0000000000400060 0x0000000000400060 RW 0x1000

LOAD 0x000000000040f000 0xffffffff8050e000 0x000000000050e000

0x0000000000000000 0x0000000000002000 RW 0x1000

LOAD 0x000000000040f000 0xffffffff80510000 0x0000000000510000

0x0000000000000000 0x0000000000001000 R 0x1000

LOAD 0x000000000040f000 0xffffffff80511000 0x0000000000511000

0x0000000000000000 0x0000000000005000 RW 0x1000

LOAD 0x000000000040f000 0xffffffff80516000 0x0000000000516000

0x0000000000000000 0x0000000000001000 R 0x1000

Section to Segment mapping:

Segment Sections...

00 .text .ltext.unlikely. .ltext.memcpy .ltext.memset

01 .rodata .rodata.cst16 .rodata.cst4 .rodata.str1.1 .lrodata

02 .data

03 .bss

04 __stackguard_upper

05 __stack

06 __stackguard_lower

Summary

In this chapter, we mapped the direct map region and the kernel image region as Ymir's memory map. As a result, the page tables provided by UEFI are no longer needed and can be safely freed. Combined with the previous chapters, this completes the replacement of all data structures originally provided by UEFI. We can now use memory regions marked as BootServicesData in the UEFI memory map. In the next chapter, we’ll implement Zig’s @panic() handler to make future debugging a bit easier.

[^1]: Strictly speaking, Ymir knows exactly where the kernel is loaded because it specifies the load address. What remains unknown are the size of the loaded image and, in cases where segments with different page attributes are loaded separately, the sizes and addresses of each individual segment.

[^2]: The original Ymir enables PCID.