Virtualizing MSR

This chapter covers MSR virtualization. The host can choose the MSR values presented to the guest and, conversely, can inspect or alter the values the guest tries to write to MSRs. We also make sure that MSR values are properly saved and restored across VM Entry and VM Exit.

Important: The source code for this chapter is in the whiz-vmm-msr branch.

VM Exit Handler

When the guest tries to execute RDMSR or WRMSR instructions, a VM Exit may occur. Whether a VM Exit happens is controlled by the MSR Bitmaps in the VMCS Execution Control category. MSR Bitmaps are bitmaps mapped to MSR addresses, and if an RDMSR or WRMSR is executed on an MSR with a bit set to 1, a VM Exit occurs. Operations on MSRs with bits set to 0 do not trigger a VM Exit. Additionally, disabling MSR Bitmaps causes all RDMSR and WRMSR instructions on any MSR to trigger a VM Exit.
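To make the bitmap lookup concrete, here is a minimal sketch of how a single RDMSR/WRMSR is checked against a 4 KiB MSR bitmap page. The page is split into four 1 KiB quarters: reads of low MSRs (0x0000_0000 to 0x0000_1FFF), reads of high MSRs (0xC000_0000 to 0xC000_1FFF), writes of low MSRs, and writes of high MSRs. The helper name `causesExit` and the `Range` type are hypothetical and not part of Ymir, which disables the bitmaps entirely.

```zig
const std = @import("std");

/// The MSR bitmap occupies one 4 KiB page.
const bitmap_size = 4096;

/// Hypothetical helper: would an access to `msr` cause a VM Exit under `bitmap`?
fn causesExit(bitmap: *const [bitmap_size]u8, msr: u32, is_write: bool) bool {
    const Range = struct { base: usize, bit: u32 };
    const range: Range = if (msr <= 0x1FFF)
        Range{ .base = 0, .bit = msr } // low MSR range
    else if (msr >= 0xC000_0000 and msr <= 0xC000_1FFF)
        Range{ .base = 1024, .bit = msr - 0xC000_0000 } // high MSR range
    else
        return true; // MSRs outside both ranges always cause a VM Exit

    // The write bitmaps follow the two read bitmaps.
    const base = if (is_write) range.base + 2048 else range.base;
    const byte = bitmap[base + range.bit / 8];
    const shift: u3 = @intCast(range.bit % 8);
    return ((byte >> shift) & 1) != 0;
}

test "MSR bitmap lookup" {
    var bitmap = [_]u8{0} ** bitmap_size;
    // Intercept only writes to IA32_LSTAR (0xC000_0082).
    bitmap[3072 + 0x82 / 8] |= 1 << (0x82 % 8);

    try std.testing.expect(causesExit(&bitmap, 0xC000_0082, true));
    try std.testing.expect(!causesExit(&bitmap, 0xC000_0082, false));
    try std.testing.expect(causesExit(&bitmap, 0x4000_0000, false));
}
```

A hypervisor that wants to let most MSR accesses through without exiting would fill such a page selectively and enable the bitmap control. Intercepting everything, as this series does, trades performance for simplicity.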

In this series, MSR Bitmaps are disabled so that all RDMSR and WRMSR instructions on all MSRs trigger a VM Exit:

    fn setupExecCtrls(vcpu: *Vcpu, _: Allocator) VmxError!void {
        ...
        ppb_exec_ctrl.use_msr_bitmap = false;
        ...
    }

RDMSR causes a VM Exit with Exit Reason 31, and WRMSR with 32. Let's add the switch case for each instruction:

    fn handleExit(self: *Self, exit_info: vmx.ExitInfo) VmxError!void {
        switch (exit_info.basic_reason) {
            ...
            .rdmsr => {
                try msr.handleRdmsrExit(self);
                try self.stepNextInst();
            },
            .wrmsr => {
                try msr.handleWrmsrExit(self);
                try self.stepNextInst();
            },
        }
        ...
    }

Saving and Restoring MSR

Before implementing the VM Exit handler for MSR access, let's ensure MSR values are saved and restored during VM Entry and VM Exit. Currently, except for a few MSRs, most of them are shared between the guest and host.

MSRs Automatically Saved and Restored

The following guest MSRs are automatically loaded from their respective locations during VM Entry:

| MSR | Condition | Source of Load |
|---|---|---|
| IA32_DEBUGCTL | load debug controls in VMCS VM-Entry Control is enabled | Guest-State |
| IA32_SYSENTER_CS | (Unconditional) | Guest-State |
| IA32_SYSENTER_ESP | (Unconditional) | Guest-State |
| IA32_SYSENTER_EIP | (Unconditional) | Guest-State |
| IA32_FS_BASE | (Unconditional) | FS.Base in Guest-State |
| IA32_GS_BASE | (Unconditional) | GS.Base in Guest-State |
| IA32_PERF_GLOBAL_CTRL | load IA32_PERF_GLOBAL_CTRL in VMCS VM-Entry Control is enabled | Guest-State |
| IA32_PAT | load IA32_PAT in VMCS VM-Entry Control is enabled | Guest-State |
| IA32_EFER | load IA32_EFER in VMCS VM-Entry Control is enabled | Guest-State |
| IA32_BNDCFGS | load IA32_BNDCFGS in VMCS VM-Entry Control is enabled | Guest-State |
| IA32_RTIT_CTL | load IA32_RTIT_CTL in VMCS VM-Entry Control is enabled | Guest-State |
| IA32_S_CET | load CET in VMCS VM-Entry Control is enabled | Guest-State |
| IA32_INTERRUPT_SSP_TABLE_ADDR | load CET in VMCS VM-Entry Control is enabled | Guest-State |
| IA32_LBR_CTL | load IA32_LBR_CTL in VMCS VM-Entry Control is enabled | Guest-State |
| IA32_PKRS | load PKRS in VMCS VM-Entry Control is enabled | Guest-State |

The following host MSRs are automatically loaded from their respective locations during VM Exit:

| MSR | Condition | Source of Load |
|---|---|---|
| IA32_DEBUGCTL | (Unconditional) | Cleared to 0 |
| IA32_SYSENTER_CS | (Unconditional) | Host-State |
| IA32_SYSENTER_ESP | (Unconditional) | Host-State |
| IA32_SYSENTER_EIP | (Unconditional) | Host-State |
| IA32_FS_BASE | (Unconditional) | FS.Base in Host-State |
| IA32_GS_BASE | (Unconditional) | GS.Base in Host-State |
| IA32_PERF_GLOBAL_CTRL | load IA32_PERF_GLOBAL_CTRL in VMCS VM-Exit Control is enabled | Host-State |
| IA32_PAT | load IA32_PAT in VMCS VM-Exit Control is enabled | Host-State |
| IA32_EFER | load IA32_EFER in VMCS VM-Exit Control is enabled | Host-State |
| IA32_BNDCFGS | clear IA32_BNDCFGS in VMCS VM-Exit Control is enabled | Cleared to 0 |
| IA32_RTIT_CTL | clear IA32_RTIT_CTL in VMCS VM-Exit Control is enabled | Cleared to 0 |
| IA32_S_CET | load CET in VMCS VM-Exit Control is enabled | Host-State |
| IA32_INTERRUPT_SSP_TABLE_ADDR | load CET in VMCS VM-Exit Control is enabled | Host-State |
| IA32_PKRS | load PKRS in VMCS VM-Exit Control is enabled | Host-State |

The following guest MSRs are automatically saved to their respective locations during VM Exit:

| MSR | Condition | Destination of Save |
|---|---|---|
| IA32_DEBUGCTL | save debug controls in VMCS VM-Exit Control is enabled | Guest-State |
| IA32_PAT | save IA32_PAT in VMCS VM-Exit Control is enabled | Guest-State |
| IA32_EFER | save IA32_EFER in VMCS VM-Exit Control is enabled | Guest-State |
| IA32_BNDCFGS | load IA32_BNDCFGS in VMCS VM-Entry Control is supported | Guest-State |
| IA32_RTIT_CTL | load IA32_RTIT_CTL in VMCS VM-Entry Control is supported | Guest-State |
| IA32_S_CET | load CET in VMCS VM-Entry Control is supported | Guest-State |
| IA32_INTERRUPT_SSP_TABLE_ADDR | load CET in VMCS VM-Entry Control is supported | Guest-State |
| IA32_LBR_CTL | load IA32_LBR_CTL in VMCS VM-Entry Control is supported | Guest-State |
| IA32_PKRS | load PKRS in VMCS VM-Entry Control is supported | Guest-State |
| IA32_PERF_GLOBAL_CTRL | save IA32_PERF_GLOBAL_CTRL in VMCS VM-Exit Control is enabled | Guest-State |

These MSRs are automatically saved and loaded during VM Entry and VM Exit. Because their values live in the VMCS, the host and guest never share them. Some of them require the corresponding setting in the VM-Exit or VM-Entry Controls to be enabled. In Ymir, automatic save and load is enabled for the MSRs listed below. The other MSRs in the tables above are not used by Ymir, so they do not need to be virtualized (the guest's values simply remain visible while the host runs):

- IA32_PAT

- IA32_EFER

    fn setupExitCtrls(_: *Vcpu) VmxError!void {
        ...
        exit_ctrl.load_ia32_efer = true;
        exit_ctrl.save_ia32_efer = true;
        exit_ctrl.load_ia32_pat = true;
        exit_ctrl.save_ia32_pat = true;
        ...
    }

    fn setupEntryCtrls(_: *Vcpu) VmxError!void {
        ...
        entry_ctrl.load_ia32_efer = true;
        entry_ctrl.load_ia32_pat = true;
        ...
    }

MSR Area

MSRs other than those listed above are not saved or restored during VM Entry/VM Exit unless explicitly configured. In Ymir, the following MSRs will be additionally saved and restored:

- IA32_TSC_AUX

- IA32_STAR

- IA32_LSTAR

- IA32_CSTAR

- IA32_FMASK

- IA32_KERNEL_GS_BASE

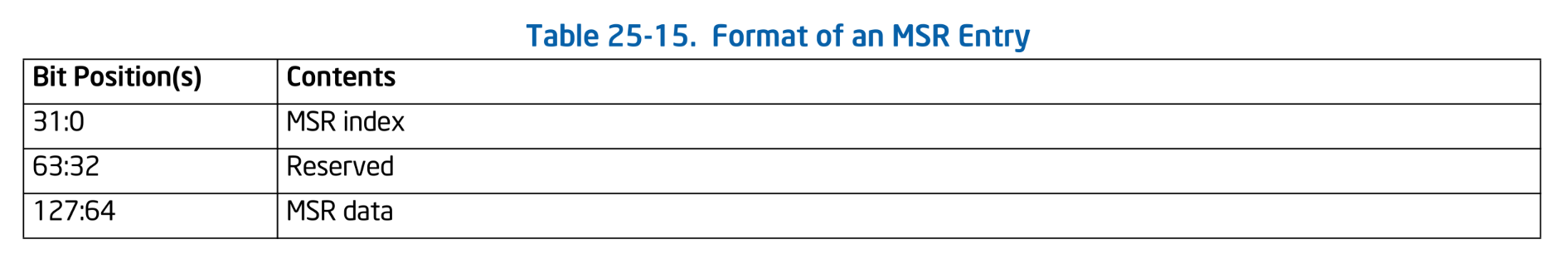

MSRs that are loaded and saved during VM Exit/Entry are stored in a region called the MSR Area. The MSR Area is an array of 128-bit entries, each called an MSR entry. Each MSR entry has the following structure and holds the data of the MSR specified by index:

Format of an MSR Entry. SDM Vol.3C Table 25-15.

There are three kinds of MSR Area:

- VM-Entry MSR-Load Area: Area for loading guest MSRs during VM Entry

- VM-Exit MSR-Store Area: Area for saving guest MSRs during VM Exit

- VM-Exit MSR-Load Area: Area for loading host MSRs during VM Exit

There is no area for saving host MSRs during VM Entry. Presumably (and understandably), since the host is virtualization-aware, it is expected to save them manually before VM Entry.

Define a structure representing the MSR Area:

    pub const ShadowMsr = struct {
        /// Maximum number of MSR entries in the area.
        /// 512 entries of 16 bytes each occupy 8 KiB.
        const max_num_ents = 512;

        /// MSR entries.
        ents: []SavedMsr,
        /// Number of registered MSR entries.
        num_ents: usize = 0,

        /// MSR Entry.
        pub const SavedMsr = packed struct(u128) {
            index: u32,
            reserved: u32 = 0,
            data: u64,
        };

        /// Initialize saved MSR page.
        pub fn init(allocator: Allocator) !ShadowMsr {
            const ents = try allocator.alloc(SavedMsr, max_num_ents);
            @memset(ents, std.mem.zeroes(SavedMsr));

            return ShadowMsr{
                .ents = ents,
            };
        }

        /// Register or update MSR entry.
        pub fn set(self: *ShadowMsr, index: am.Msr, data: u64) void {
            return self.setByIndex(@intFromEnum(index), data);
        }

        /// Register or update MSR entry indexed by `index`.
        pub fn setByIndex(self: *ShadowMsr, index: u32, data: u64) void {
            // Update the entry in place if the MSR is already registered.
            for (0..self.num_ents) |i| {
                if (self.ents[i].index == index) {
                    self.ents[i].data = data;
                    return;
                }
            }
            // Check the capacity before writing so that we never index past the array.
            if (self.num_ents >= max_num_ents) {
                @panic("Too many MSR entries registered.");
            }
            self.ents[self.num_ents] = SavedMsr{ .index = index, .data = data };
            self.num_ents += 1;
        }

        /// Get the saved MSRs.
        pub fn savedEnts(self: *ShadowMsr) []SavedMsr {
            return self.ents[0..self.num_ents];
        }

        /// Find the saved MSR entry.
        pub fn find(self: *ShadowMsr, index: am.Msr) ?*SavedMsr {
            const index_num = @intFromEnum(index);
            for (0..self.num_ents) |i| {
                if (self.ents[i].index == index_num) {
                    return &self.ents[i];
                }
            }
            return null;
        }

        /// Get the host physical address of the MSR page.
        pub fn phys(self: *ShadowMsr) u64 {
            return mem.virt2phys(self.ents.ptr);
        }
    };

ShadowMsr holds an array of MSR entries and provides an API to manipulate the registered MSRs. Of the three MSR Areas, the host area (Load) and the guest area (Store and Load) are each represented by a member variable added to Vcpu:

    pub const Vcpu = struct {
        host_msr: msr.ShadowMsr = undefined,
        guest_msr: msr.ShadowMsr = undefined,
        ...
    };

During VMCS initialization (setupVmcs()), initialize the guest and host MSR Areas. The physical addresses of the MSR Areas are set in the VM-Exit Controls and VM-Entry Controls as MSR-load address and MSR-store address. The number of MSRs registered in the MSR Areas is set in MSR-load count and MSR-store count. Only the first count entries of the registered MSR Area are loaded and saved during VM Exit and VM Entry. The host MSRs are registered with their current values as is. The guest MSRs are initialized to all zeros:

    fn registerMsrs(vcpu: *Vcpu, allocator: Allocator) !void {
        vcpu.host_msr = try msr.ShadowMsr.init(allocator);
        vcpu.guest_msr = try msr.ShadowMsr.init(allocator);

        const hm = &vcpu.host_msr;
        const gm = &vcpu.guest_msr;

        // Host MSRs.
        hm.set(.tsc_aux, am.readMsr(.tsc_aux));
        hm.set(.star, am.readMsr(.star));
        hm.set(.lstar, am.readMsr(.lstar));
        hm.set(.cstar, am.readMsr(.cstar));
        hm.set(.fmask, am.readMsr(.fmask));
        hm.set(.kernel_gs_base, am.readMsr(.kernel_gs_base));

        // Guest MSRs.
        gm.set(.tsc_aux, 0);
        gm.set(.star, 0);
        gm.set(.lstar, 0);
        gm.set(.cstar, 0);
        gm.set(.fmask, 0);
        gm.set(.kernel_gs_base, 0);

        // Init MSR data in VMCS.
        try vmwrite(vmcs.ctrl.exit_msr_load_address, hm.phys());
        try vmwrite(vmcs.ctrl.exit_msr_store_address, gm.phys());
        try vmwrite(vmcs.ctrl.entry_msr_load_address, gm.phys());
    }
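The addresses and counts written to the VMCS are subject to a few architectural constraints. The sketch below illustrates two of them as described in the SDM: when a count is non-zero, the lower 4 bits of the corresponding MSR Area address must be 0, and bits 27:25 of IA32_VMX_MISC give a value N from which the recommended maximum count per area is 512 * (N + 1). Both function names are hypothetical helpers for illustration and do not exist in Ymir.

```zig
const std = @import("std");

/// Recommended maximum number of entries per MSR Area,
/// derived from bits 27:25 of IA32_VMX_MISC: 512 * (N + 1).
fn recommendedMaxMsrCount(vmx_misc: u64) u64 {
    const n = (vmx_misc >> 25) & 0b111;
    return 512 * (n + 1);
}

/// An MSR Area address must be 16-byte aligned when its count is non-zero.
fn isValidMsrAreaAddr(phys: u64) bool {
    return (phys & 0xF) == 0;
}

test "MSR Area constraints" {
    // N = 0 gives the minimum recommended maximum of 512 entries.
    try std.testing.expectEqual(@as(u64, 512), recommendedMaxMsrCount(0));
    // N = 1 doubles it.
    try std.testing.expectEqual(@as(u64, 1024), recommendedMaxMsrCount(@as(u64, 1) << 25));

    try std.testing.expect(isValidMsrAreaAddr(0x1000));
    try std.testing.expect(!isValidMsrAreaAddr(0x1008));
}
```

Since each entry is 16 bytes, a slice of entries allocated with natural alignment satisfies the alignment rule as long as the virtual-to-physical mapping preserves the low address bits.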

The VM-Exit MSR-Load Area (the area loaded into the host's MSRs during VM Exit) must be updated before every VM Entry. Otherwise, the initially set values would be used indefinitely. Call the following function at the beginning of the while loop inside the VM Entry loop loop():

    fn updateMsrs(vcpu: *Vcpu) VmxError!void {
        // Save host MSRs.
        for (vcpu.host_msr.savedEnts()) |ent| {
            vcpu.host_msr.setByIndex(ent.index, am.readMsr(@enumFromInt(ent.index)));
        }

        // Update MSR counts.
        try vmwrite(vmcs.ctrl.exit_msr_load_count, vcpu.host_msr.num_ents);
        try vmwrite(vmcs.ctrl.exit_msr_store_count, vcpu.guest_msr.num_ents);
        try vmwrite(vmcs.ctrl.entry_msr_load_count, vcpu.guest_msr.num_ents);
    }

In this series, the number of MSRs registered in the MSR Area does not change. As will be covered later, if the guest attempts a WRMSR on an MSR not registered in the MSR Area, it will cause an abort. Therefore, there is no need to update the MSR counts in practice. This design is intended to accommodate potential future support for dynamically adding MSRs to the MSR Area.

With this, the setup of MSRs registered in the MSR Area and those automatically saved and restored is complete. The remaining task is to implement the handling of guest RDMSR and WRMSR instructions to read and write the values of MSRs registered in the MSR Area.

RDMSR Handler

Implement the handler for RDMSR. First, prepare helper functions that store the RDMSR result in the guest registers. The upper 32 bits of the result go into RDX and the lower 32 bits into RAX. There are two ways to present an MSR value to the guest:

- Return the value stored in the VMCS: for MSRs that are saved and loaded automatically

- Return the value registered in the MSR Area: for all other MSRs

Use setRetVal() for the former, and shadowRead() for the latter:

    /// Concatenate two 32-bit values into a 64-bit value.
    fn concat(r1: u64, r2: u64) u64 {
        return ((r1 & 0xFFFF_FFFF) << 32) | (r2 & 0xFFFF_FFFF);
    }

    /// Set the 64-bit return value to the guest registers.
    fn setRetVal(vcpu: *Vcpu, val: u64) void {
        const regs = &vcpu.guest_regs;
        // RDMSR returns the value in EDX:EAX and clears the upper 32 bits of RDX and RAX.
        regs.rdx = val >> 32;
        regs.rax = val & 0xFFFF_FFFF;
    }

    /// Read from the MSR Area.
    fn shadowRead(vcpu: *Vcpu, msr_kind: am.Msr) void {
        if (vcpu.guest_msr.find(msr_kind)) |msr| {
            setRetVal(vcpu, msr.data);
        } else {
            log.err("RDMSR: MSR is not registered: {s}", .{@tagName(msr_kind)});
            vcpu.abort();
        }
    }
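As a small worked example of the register convention, the following standalone sketch splits a 64-bit MSR value into its EDX and EAX halves and joins them again. The names `split` and `join` are hypothetical and used only for illustration; they are independent of the Vcpu type.

```zig
const std = @import("std");

/// Split a 64-bit MSR value into the halves that RDMSR returns in EDX:EAX.
fn split(val: u64) struct { edx: u32, eax: u32 } {
    return .{ .edx = @truncate(val >> 32), .eax = @truncate(val) };
}

/// Join the EDX:EAX pair that WRMSR takes into a 64-bit MSR value.
fn join(edx: u32, eax: u32) u64 {
    return (@as(u64, edx) << 32) | eax;
}

test "EDX:EAX round trip" {
    const parts = split(0x1234_5678_9ABC_DEF0);
    try std.testing.expectEqual(@as(u32, 0x1234_5678), parts.edx);
    try std.testing.expectEqual(@as(u32, 0x9ABC_DEF0), parts.eax);
    try std.testing.expectEqual(@as(u64, 0x1234_5678_9ABC_DEF0), join(parts.edx, parts.eax));
}
```

The same convention applies in both directions, which is why the WRMSR handler can reuse a single concatenation helper on RDX and RAX.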

With this, let's implement the RDMSR handler:

    pub fn handleRdmsrExit(vcpu: *Vcpu) VmxError!void {
        const guest_regs = &vcpu.guest_regs;
        const msr_kind: am.Msr = @enumFromInt(guest_regs.rcx);

        switch (msr_kind) {
            .apic_base => setRetVal(vcpu, std.math.maxInt(u64)), // Disabled
            .efer => setRetVal(vcpu, try vmx.vmread(vmcs.guest.efer)),
            .fs_base => setRetVal(vcpu, try vmx.vmread(vmcs.guest.fs_base)),
            .gs_base => setRetVal(vcpu, try vmx.vmread(vmcs.guest.gs_base)),
            .kernel_gs_base => shadowRead(vcpu, msr_kind),
            else => {
                log.err("Unhandled RDMSR: {?}", .{msr_kind});
                vcpu.abort();
            },
        }
    }

RDMSR on an unsupported MSR (else) causes an abort. The set of supported MSRs has been determined empirically. Initially, a switch with only else was used to run the guest, and MSRs were added one by one until Linux successfully booted. It's surprising how few MSRs are actually required for Linux to run. Speaking of surprises, as I write this section, it's November—the season when chestnuts are in peak flavor.

WRMSR Handler

Let's create a helper function as we did for RDMSR:

    fn shadowWrite(vcpu: *Vcpu, msr_kind: am.Msr) void {
        const regs = &vcpu.guest_regs;
        if (vcpu.guest_msr.find(msr_kind)) |_| {
            vcpu.guest_msr.set(msr_kind, concat(regs.rdx, regs.rax));
        } else {
            log.err("WRMSR: MSR is not registered: {s}", .{@tagName(msr_kind)});
            vcpu.abort();
        }
    }

Here's a WRMSR handler:

    pub fn handleWrmsrExit(vcpu: *Vcpu) VmxError!void {
        const regs = &vcpu.guest_regs;
        const value = concat(regs.rdx, regs.rax);
        const msr_kind: am.Msr = @enumFromInt(regs.rcx);

        switch (msr_kind) {
            .star,
            .lstar,
            .cstar,
            .tsc_aux,
            .fmask,
            .kernel_gs_base,
            => shadowWrite(vcpu, msr_kind),
            .sysenter_cs => try vmx.vmwrite(vmcs.guest.sysenter_cs, value),
            .sysenter_eip => try vmx.vmwrite(vmcs.guest.sysenter_eip, value),
            .sysenter_esp => try vmx.vmwrite(vmcs.guest.sysenter_esp, value),
            .efer => try vmx.vmwrite(vmcs.guest.efer, value),
            .gs_base => try vmx.vmwrite(vmcs.guest.gs_base, value),
            .fs_base => try vmx.vmwrite(vmcs.guest.fs_base, value),
            else => {
                log.err("Unhandled WRMSR: {?}", .{msr_kind});
                vcpu.abort();
            },
        }
    }

There are more MSRs that need to be supported for WRMSR compared to RDMSR. This makes sense—MSRs like STAR, LSTAR, and CSTAR (used as syscall entry points) are typically written to but not read.

Summary

In this chapter, we configured the MSR Area to ensure that guest and host MSRs are properly saved and restored during VM Entry and VM Exit. This separates the MSR space between the host and guest. We also implemented RDMSR and WRMSR handlers to read and write values registered in the VMCS or MSR Area. With this, MSR virtualization is complete.

As has become customary, let's run the guest at the end:

    [INFO ] main | Entered VMX root operation.
    [INFO ] vmx | Guest memory region: 0x0000000000000000 - 0x0000000006400000
    [INFO ] vmx | Guest kernel code offset: 0x0000000000005000
    [DEBUG] ept | EPT Level4 Table @ FFFF88800000E000
    [INFO ] vmx | Guest memory is mapped: HVA=0xFFFF888000A00000 (size=0x6400000)
    [INFO ] main | Setup guest memory.
    [INFO ] main | Starting the virtual machine...
    No EFI environment detected.
    early console in extract_kernel
    input_data: 0x0000000002d582b9
    input_len: 0x0000000000c7032c
    output: 0x0000000001000000
    output_len: 0x000000000297e75c
    kernel_total_size: 0x0000000002630000
    needed_size: 0x0000000002a00000
    trampoline_32bit: 0x0000000000000000
    KASLR disabled: 'nokaslr' on cmdline.
    Decompressing Linux... Parsing ELF... No relocation needed... done.
    Booting the kernel (entry_offset: 0x0000000000000000).
    [ERROR] vcpu | Unhandled VM-exit: reason=arch.x86.vmx.common.ExitReason.triple_fault
    [ERROR] vcpu | === vCPU Information ===
    [ERROR] vcpu | [Guest State]
    [ERROR] vcpu | RIP: 0xFFFFFFFF8102E0B9
    [ERROR] vcpu | RSP: 0x0000000002A03F58
    [ERROR] vcpu | RAX: 0x00000000032C8000
    [ERROR] vcpu | RBX: 0x0000000000000800
    [ERROR] vcpu | RCX: 0x0000000000000030
    [ERROR] vcpu | RDX: 0x0000000000001060
    [ERROR] vcpu | RSI: 0x00000000000001E3
    [ERROR] vcpu | RDI: 0x000000000000001C
    [ERROR] vcpu | RBP: 0x0000000001000000
    [ERROR] vcpu | R8 : 0x000000000000001C
    [ERROR] vcpu | R9 : 0x0000000000000008
    [ERROR] vcpu | R10: 0x00000000032CB000
    [ERROR] vcpu | R11: 0x000000000000001B
    [ERROR] vcpu | R12: 0x0000000000000000
    [ERROR] vcpu | R13: 0x0000000000000000
    [ERROR] vcpu | R14: 0x0000000000000000
    [ERROR] vcpu | R15: 0x0000000000010000
    [ERROR] vcpu | CR0: 0x0000000080050033
    [ERROR] vcpu | CR3: 0x00000000032C8000
    [ERROR] vcpu | CR4: 0x0000000000002020
    [ERROR] vcpu | EFER:0x0000000000000500
    [ERROR] vcpu | CS : 0x0010 0x0000000000000000 0xFFFFFFFF

Incredible! The guest has finally started printing logs! Although the main kernel hasn't booted yet, logs are being printed because we passed earlyprintk=serial on the command line back in the Linux Boot Protocol chapter. As the message 'nokaslr' on cmdline shows, the command line specified in BootParams is correctly passed to the guest.

The message Decompressing Linux... is printed from extract_kernel(). This function is called from relocated() in head_64.S. It decompresses the compressed kernel and places it in memory, preparing to transfer control. The decompressed kernel is placed at the address specified by BootParams (i.e., 0x100_0000, which matches the output address in the log). Immediately after extract_kernel(), control jumps to this address, transferring execution to startup_64() in the other head_64.S (not the one in compressed/).

The triple fault that eventually occurs is triggered when attempting to set the PSE bit in CR4:

    ffffffff8102e0a8 <common_startup_64>:
    ffffffff8102e0a8:  ba 20 10 00 00    mov    edx,0x1020
    ffffffff8102e0ad:  83 ca 40          or     edx,0x40
    ffffffff8102e0b0:  0f 20 e1          mov    rcx,cr4
    ffffffff8102e0b3:  21 d1             and    ecx,edx
    ffffffff8102e0b5:  0f ba e9 04       bts    ecx,0x4
    ffffffff8102e0b9:  0f 22 e1          mov    cr4,rcx
    ffffffff8102e0bc:  0f ba e9 07       bts    ecx,0x7

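This MOV to CR4 clears the CR4.VMXE bit: the mask 0x1060 built in EDX does not include bit 13 (VMXE), so the AND drops it from the current CR4 value 0x2020, and the guest ends up writing 0x30 (the RCX value in the dump). If a MOV to CR4 does not trigger a VM Exit but would produce a value that violates the constraints defined by IA32_VMX_CR4_FIXED0 or IA32_VMX_CR4_FIXED1, the guest receives a #GP exception instead of a VM Exit (see SDM Vol.3C 26.3, cited at the end of this chapter). Since the guest has not yet set up an interrupt handler, this #GP leads directly to a triple fault. In the next chapter, we'll implement proper handling of CR accesses made by the guest.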
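The fixed-bit rule can be sketched in a few lines: a CR4 value is acceptable when every bit that is 1 in IA32_VMX_CR4_FIXED0 is set and every bit that is 0 in IA32_VMX_CR4_FIXED1 is clear. The function name `isValidCr4` is hypothetical, and the FIXED0/FIXED1 values in the test are assumed example values (only VMXE required, no bit forbidden). Real values must be read from the MSRs on the actual processor.

```zig
const std = @import("std");

/// Check a CR4 value against the fixed-bit constraints:
/// bits set in `fixed0` must be 1, bits clear in `fixed1` must be 0.
fn isValidCr4(val: u64, fixed0: u64, fixed1: u64) bool {
    return (val & fixed0) == fixed0 and (val & ~fixed1) == 0;
}

test "guest CR4 write from the dump" {
    // Assumed example values, not read from real hardware.
    const fixed0: u64 = 1 << 13; // CR4.VMXE must stay set
    const fixed1: u64 = std.math.maxInt(u64); // no bit is forced to 0

    // Reproduce the guest's computation: AND with the mask, then set PSE (bit 4).
    const cr4: u64 = 0x2020;
    const new_cr4 = (cr4 & (0x1020 | 0x40)) | (1 << 4);
    try std.testing.expectEqual(@as(u64, 0x30), new_cr4);

    try std.testing.expect(isValidCr4(cr4, fixed0, fixed1));
    try std.testing.expect(!isValidCr4(new_cr4, fixed0, fixed1));
}
```

Under these assumed constraints the current value 0x2020 passes while the value the guest tries to write, 0x30, fails because VMXE is missing, which is consistent with the #GP described above.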

Since serial console virtualization is not yet implemented, the guest is accessing the serial port directly. For now, let's allow this behavior.

SDM Vol.3C 26.3 CHANGES TO INSTRUCTION BEHAVIOR IN VMX NON-ROOT OPERATION